
Security is a fundamental aspect of the UOMI platform, designed to safeguard the integrity and reliability of its decentralized ecosystem. This section introduces the core security principles and mechanisms that ensure the platform's resilience against potential threats.

Through innovative technologies and protocols, UOMI addresses critical areas such as computation integrity, data integrity, and model-update integrity, laying a solid foundation for a secure and trustworthy environment. Explore how these security measures work together to protect the platform and its participants.

UOMI blockchain implements a Proof of Stake (PoS) consensus mechanism utilizing BABE (Blind Assignment for Blockchain Extension) for block production. This consensus mechanism provides a secure, energy-efficient, and scalable foundation for the network.

Validators are selected based on their UOMI token stake in the network

Higher stakes increase the probability of being chosen to produce blocks

Participants must meet minimum staking requirements to become validators

Utilizes a slot-based block production system

Random validator selection for each slot using VRF (Verifiable Random Function)

Ensures predictable block times while maintaining security

Prevents manipulation through deterministic selection process

Validators must stake tokens as collateral

Malicious behavior results in stake slashing

Economic incentives align validator interests with network health

Significantly lower energy consumption compared to Proof of Work

Faster transaction finality

Reduced hardware requirements for participation

Supports high transaction throughput

Flexible validator set size

Efficient block production and validation process

Encourages broad participation through staking

Democratic validator selection process

Reduced barriers to entry compared to mining

Meet minimum stake requirements

Run validator node infrastructure

Maintain high uptime and performance

Follow network protocols and updates

Participate in network security without running infrastructure

Delegate tokens to trusted validators

Earn proportional rewards from validation activities

This consensus mechanism forms the foundation of UOMI's reliable and efficient blockchain infrastructure, enabling secure operation of AI models and agent interactions while maintaining network decentralization.
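The stake-weighted, VRF-based slot assignment described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not UOMI's implementation:

- A SHA-256 hash stands in for the VRF. Unlike a real VRF, its output comes with no proof that other nodes can verify.
- The constant `c`, the validator names and the stakes are hypothetical.
- The threshold follows BABE's primary-slot rule: a validator with relative stake θ wins a slot when its VRF output falls below 1 - (1 - c)^θ.
- BABE's secondary slots, which cover slots that no validator wins, are omitted.

```javascript
const crypto = require("crypto");

// Probability that a validator with relative stake `theta` wins a given slot,
// following BABE's primary-slot threshold: 1 - (1 - c)^theta.
function slotProbability(c, theta) {
  return 1 - Math.pow(1 - c, theta);
}

// Stand-in for a VRF: hashes (epochRandomness, slot, validatorId) into [0, 1).
// A real VRF output is also deterministic, but it carries a proof others can verify.
function pseudoVrf(epochRandomness, slot, validatorId) {
  const digest = crypto
    .createHash("sha256")
    .update(`${epochRandomness}:${slot}:${validatorId}`)
    .digest();
  // Use the first 6 bytes (48 bits) as a fraction in [0, 1).
  return digest.readUIntBE(0, 6) / 2 ** 48;
}

// Returns the validators eligible to author `slot`; the result may be empty or contain several.
function slotLeaders(validators, c, epochRandomness, slot) {
  const total = validators.reduce((sum, v) => sum + v.stake, 0);
  return validators
    .filter((v) => pseudoVrf(epochRandomness, slot, v.id) < slotProbability(c, v.stake / total))
    .map((v) => v.id);
}

// Hypothetical validator set: a higher stake gives a higher chance per slot.
const validators = [
  { id: "alice", stake: 600 },
  { id: "bob", stake: 300 },
  { id: "carol", stake: 100 },
];

for (let slot = 0; slot < 5; slot++) {
  console.log(slot, slotLeaders(validators, 0.25, "epoch-randomness", slot));
}
```

The win probability grows with relative stake, so larger stakes are selected more often. Any given slot can still end up with zero or several eligible validators.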

Agents are a core concept within the UOMI blockchain, representing autonomous entities that perform operations directly on the network. These agents are designed to leverage AI models to process data, execute computations, and interact with the blockchain ecosystem seamlessly. By integrating intelligent behavior with decentralized technology, agents become powerful tools capable of executing complex tasks efficiently.

An agent’s role extends beyond computation. It has the capability to trigger transactions on the blockchain, enabling it to manage digital assets, interact with smart contracts, and coordinate activities within the decentralized environment. This level of autonomy allows agents to operate as independent entities, driving the execution of on-chain operations without requiring constant human intervention.

Agents are tightly integrated into the UOMI infrastructure, functioning as the active participants that bridge AI capabilities with blockchain processes. They embody the intelligence of the network, processing inputs deterministically to ensure consistency across all nodes. By combining autonomy, intelligence, and decentralization, agents play a pivotal role in realizing UOMI’s vision of an AI-powered blockchain ecosystem.

TSS, or Threshold Signature Scheme, is a cryptographic protocol that enables a group of nodes to collaboratively generate a shared signature. This scheme ensures that the resulting signature is valid only if a predefined number of nodes (known as the threshold) participate in the signing process.

Shared Public Key: The group of nodes collectively holds a shared public key.

Private Keys: Each node possesses its own private key, which is used in the signature generation process.

Threshold: A predefined number of nodes must participate in generating the signature. If the number of participating nodes meets or exceeds this threshold, the signature is considered valid.

Initialization:

A shared public key is established for the group.

Each node generates its private key, which remains secret.

Signature Generation:

In the UOMI blockchain, TSS is integrated to provide agents with their own wallets. This allows agents to perform onchain transactions during their execution. The use of TSS enhances security and ensures that transactions are only authorized when a sufficient number of nodes (at least the threshold) take part in signing, thus maintaining the integrity of the process.
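The threshold property behind TSS can be illustrated with Shamir secret sharing over a prime field. Any t of the n shares reconstruct the secret, while fewer shares do not.

This is a simplified sketch, not UOMI's signing protocol. Real threshold signature schemes never reassemble the private key in one place. They do, however, rely on the same Lagrange interpolation to combine partial results. The prime, the threshold, the share count and the coefficients below are arbitrary example values.

```javascript
// Prime modulus for the field (2^127 - 1, a Mersenne prime); an arbitrary choice for the example.
const P = 2n ** 127n - 1n;

const mod = (a) => ((a % P) + P) % P;

// Modular exponentiation by squaring.
function modPow(base, exp) {
  let result = 1n;
  base = mod(base);
  while (exp > 0n) {
    if (exp & 1n) result = mod(result * base);
    base = mod(base * base);
    exp >>= 1n;
  }
  return result;
}

// Modular inverse via Fermat's little theorem (valid because P is prime).
const modInv = (a) => modPow(a, P - 2n);

// Split `secret` into n shares so that any t of them reconstruct it.
// `coefficients` are a_1..a_{t-1}; in practice they must be random, here they are fixed for reproducibility.
function split(secret, n, coefficients) {
  const poly = [secret, ...coefficients]; // f(x) = secret + a_1 x + ... + a_{t-1} x^{t-1}
  const shares = [];
  for (let x = 1n; x <= BigInt(n); x++) {
    let y = 0n;
    for (let i = poly.length - 1; i >= 0; i--) y = mod(y * x + poly[i]); // Horner's rule
    shares.push({ x, y });
  }
  return shares;
}

// Lagrange interpolation at x = 0 recovers f(0), i.e. the secret.
function combine(shares) {
  let secret = 0n;
  for (const { x: xi, y: yi } of shares) {
    let num = 1n;
    let den = 1n;
    for (const { x: xj } of shares) {
      if (xj === xi) continue;
      num = mod(num * -xj);
      den = mod(den * (xi - xj));
    }
    secret = mod(secret + yi * num * modInv(den));
  }
  return secret;
}

// 3-of-5 example: a degree-2 polynomial needs two coefficients besides the secret.
const shares = split(123456789n, 5, [987654321n, 555555555n]);
console.log(combine([shares[0], shares[2], shares[4]])); // any 3 shares suffice
```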

Developers can create and deploy smart contracts on Uomi Network using various programming languages, including Solidity, compatible with Ethereum smart contracts, and ink!, a Rust-based smart contract language for the Polkadot ecosystem. This compatibility ensures a seamless transition for developers from other blockchain ecosystems, fostering interoperability and encouraging the adoption of the Uomi Network.

Uomi runtimes are based on Substrate and incorporate pallet-contracts, a sandboxed environment used to deploy and execute WebAssembly (Wasm) smart contracts. Any language that compiles to Wasm can be deployed and run on this Wasm Virtual Machine, provided the code is compatible with pallet-contracts.

To avoid unnecessary complexity and writing boilerplate code, the most appropriate method of building involves the use of an eDSL specifically targeting pallet-contracts, such as ink! (based on Rust) or ask! (based on AssemblyScript), among others as the ecosystem grows.

After compilation, a Wasm blob can be deployed and stored on-chain.
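Before deploying, it can help to sanity-check that a compiled blob is valid Wasm and exposes the entry points that pallet-contracts invokes. The sketch below assumes those entry points are exported functions named `deploy` and `call`, as produced by ink!.

It does not check the other host requirements, such as imported memory. Treat it as a quick pre-flight check rather than full compatibility validation. The function name is hypothetical.

```javascript
// Entry points pallet-contracts invokes on a contract (assumption: `deploy` and `call`).
const REQUIRED_EXPORTS = ["deploy", "call"];

// Returns a list of problems found; an empty array means the quick checks passed.
function checkContractBlob(bytes) {
  if (!WebAssembly.validate(bytes)) {
    return ["not a valid WebAssembly module"];
  }
  const exported = new Set(
    WebAssembly.Module.exports(new WebAssembly.Module(bytes))
      .filter((e) => e.kind === "function")
      .map((e) => e.name)
  );
  return REQUIRED_EXPORTS.filter((name) => !exported.has(name)).map(
    (name) => `missing exported function "${name}"`
  );
}

// Usage with a file produced by your build (the path is hypothetical):
// const fs = require("fs");
// console.log(checkContractBlob(fs.readFileSync("target/ink/my_contract.wasm")));
```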

The Uomi EVM implementation is based on the Substrate Pallet-EVM, providing a full Rust-based EVM implementation. Smart contracts on Uomi EVM can be implemented using Solidity, Vyper, or any other language capable of compiling smart contracts to EVM-compatible bytecode. Pallet-EVM aims to provide a low-friction and secure environment for the development, testing, and execution of smart contracts that is compatible with the existing Ethereum developer toolchain.

UOMI is a Layer 1 blockchain specialized for:

Executing all types of smart contracts

Providing a hybrid EVM + Wasm environment with interoperability

Seamlessly aggregating features and assets within its ecosystem

Running AI-driven economic agents

UOMI is built with Substrate, inheriting many of its powerful features, including its account system. At its core, UOMI leverages Substrate's technology stack while operating as an independent Layer 1 blockchain.

At a high level, a UOMI node provides a layered environment with two main elements:

An outer node that handles:

Network activity and peer discovery

Transaction request management

Consensus mechanisms

FRAME (Framework for Runtime Aggregation of Modularized Entities) encompasses numerous modules and support libraries that simplify runtime development. In Substrate, these modules (called pallets) offer customizable business logic for different use cases and features that you might want to include in your runtime.

The framework provides pallets for common blockchain functionalities such as:

Staking

Consensus

Governance

Uomi-engine

UOMI provides a robust environment for smart contract execution through two main Virtual Machine (VM) implementations:

The Ethereum Virtual Machine (EVM) is a virtual computer with components that enable network participants to store data and agree on the state of that data. In UOMI, the core responsibilities of the EVM are implemented in the EVM pallet, which executes Ethereum contract bytecode compiled from high-level languages such as Solidity. UOMI EVM provides a fully EVM-compatible platform, which you can learn more about in the .

UOMI includes the pallet-contracts module for WebAssembly (Wasm) smart contracts. This implementation supports the execution of Wasm-based smart contracts, providing an alternative to traditional EVM-based contracts. The Wasm environment offers several advantages, including:

Improved performance

Enhanced security features

Greater language flexibility

You can learn more about Wasm contract development in the .

One of UOMI's key features is its hybrid approach to smart contract execution, allowing developers to choose between EVM and Wasm environments based on their specific needs. This flexibility enables:

Cross-contract interactions between EVM and Wasm

Optimization of different use cases

Broader ecosystem compatibility

UOMI is an interoperable blockchain platform designed for the Polkadot and Ethereum ecosystems, supporting both Wasm and EVM smart contracts. This guide will help you quickly get up to speed with the core components of our platform.

Whether you're managing accounts or deploying smart contracts, you'll find all the essential information here to start building with UOMI. Dive into a new era of decentralized, AI-driven economic agents and explore the limitless possibilities of our innovative blockchain ecosystem!

When a transaction or message requires signing, nodes collaborate by using their private keys.

The signature is only produced if the number of contributing nodes meets the threshold.

Validation:

The generated signature can be verified using the shared public key.

This ensures that the signature is authentic and originates from the group.

RPC call responses

A runtime containing all the business logic for executing the state transition function of the blockchain. For more detailed information, see the Infrastructure and Security documentation.

Other core activities

A Uomi Network account is composed of a private key and a public key. The public key, often referred to as the account address, is publicly accessible. The private key, on the other hand, is essential for accessing and managing the associated account. While anyone can send tokens to your public address, only the holder of the private key can access and control those tokens. Consequently, safeguarding your private key is of utmost importance.

Uomi Network is compatible with two types of virtual machines, Wasm VM and EVM, and thus employs two different account formats.

Uomi is developed using Substrate, a framework for building blockchains, and it utilizes Substrate accounts. In Substrate-based chains, the public key is used to derive one or more public addresses. Instead of directly using the public key, Substrate enables the generation of multiple addresses and address formats for an account. This means that a single public-private key pair can be used to derive various addresses for different Substrate chains.

The private key is a cryptographically secure sequence of randomly generated numbers. For easier human readability, it can be represented by a sequence of random words known as a secret seed phrase or mnemonic, from which the key can be regenerated.

Substrate-based chains, including Uomi, use the ss58 address format. This format is a variant of Bitcoin's Base-58-check, with some modifications. Notably, ss58 includes an address type prefix to identify the address as belonging to a specific network.
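To make the ss58 layout concrete, here is a minimal encoder for the simple one-byte-prefix form:

- The address is Base58 of prefix || public key || checksum.
- The checksum is the first two bytes of a BLAKE2b-512 hash over the string "SS58PRE", followed by the prefix and the key.

The example uses the generic Substrate prefix 42 and the well-known development key for Alice. Uomi's own network prefix is not stated here, so substitute the correct value. Prefixes of 64 and above use a two-byte encoding, which this sketch deliberately rejects.

```javascript
const crypto = require("crypto");

// Bitcoin-style Base58 alphabet (no 0, O, I or l).
const ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz";

function base58Encode(bytes) {
  // Interpret the bytes as one big-endian integer and repeatedly divide by 58.
  let n = 0n;
  for (const b of bytes) n = (n << 8n) | BigInt(b);
  let out = "";
  while (n > 0n) {
    out = ALPHABET[Number(n % 58n)] + out;
    n /= 58n;
  }
  // Each leading zero byte is encoded as a leading "1".
  for (const b of bytes) {
    if (b !== 0) break;
    out = "1" + out;
  }
  return out;
}

// SS58 encoding of a 32-byte public key with a simple (one-byte) network prefix, 0..63.
function ss58Encode(publicKey, prefix) {
  if (publicKey.length !== 32) throw new Error("expected a 32-byte public key");
  if (!Number.isInteger(prefix) || prefix < 0 || prefix > 63) {
    throw new Error("this sketch only supports one-byte prefixes (0-63)");
  }
  const payload = Buffer.concat([Buffer.from([prefix]), publicKey]);
  // Checksum: first 2 bytes of BLAKE2b-512("SS58PRE" || prefix || publicKey).
  const checksum = crypto
    .createHash("blake2b512")
    .update(Buffer.concat([Buffer.from("SS58PRE"), payload]))
    .digest()
    .subarray(0, 2);
  return base58Encode(Buffer.concat([payload, checksum]));
}

// Alice's well-known development public key, encoded with the generic Substrate prefix 42.
const alice = Buffer.from("d43593c715fdd31c61141abd04a99fd6822c8558854ccde39a5684e7a56da27d", "hex");
console.log(ss58Encode(alice, 42));
```

The same 32-byte key yields a different address under each prefix. This is how one key pair can be used across different Substrate chains.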

On the Uomi EVM side, Ethereum-style addresses (H160 format) are supported within the Substrate-based chain. These addresses are 42 characters long: the 0x prefix followed by 40 hexadecimal characters (20 bytes). Each Ethereum-style address corresponds to a private key, which can be used to sign transactions on the Ethereum side of the chain. Additionally, these addresses are mapped to a storage slot within the Substrate Balance pallet, linking them to Substrate-style addresses (H256 format).

OPOC, or Optimistic Proof of Computation, is a mechanism designed to ensure the integrity and security of computational operations that occur outside the blockchain (offchain). This approach leverages both offchain and onchain elements to provide a tamper-proof system where certain operations are validated by multiple nodes to achieve consensus.

Offchain Operations: These are processes that occur outside the blockchain environment. While they offer scalability and speed, they are susceptible to tampering by malicious nodes.

Onchain Operations: These are processes executed directly on the blockchain, ensuring tamper-proof and immutable records.

User Interaction

A user initiates a request by calling a function in a Solidity contract, providing necessary parameters such as NFT_ID, INPUT_DATA, and INPUT_FILE_CID.

Request Initialization

Wasm and IPFS File Verification:

The system ensures that the files required for execution (the AI agent's Wasm and the input file) are available and valid.

It checks the status of these files through the IPFS pallet, verifying their usability and expiration.

Node Assignment and Execution:

Level 0: A single node executes the request.

Level 1: Multiple nodes are involved to achieve a higher consensus.

Level 2: Additional nodes are engaged if discrepancies are found, ensuring a majority consensus.

The final result is stored in the Outputs storage.

Nodes are rewarded based on their participation and accuracy, with penalties for nodes in the OpocBlacklist or those with timeouts and errors.

Nodes continually monitor and execute assigned tasks, ensuring timely processing and consensus.

Results are stored, and timeouts are managed to maintain system efficiency.
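The escalation flow above can be sketched as follows. The following are assumptions made for illustration; the actual thresholds and reward logic are not specified here:

- the node counts per level;
- the rule that level 1 requires unanimity;
- the `execute` callback that stands in for running the agent's Wasm on a node.

```javascript
// Hypothetical number of nodes engaged at OPOC levels 0, 1 and 2.
const LEVEL_SIZES = [1, 3, 7];

// Runs a request through OPOC-style escalation.
// `nodes`: node IDs in (random) assignment order; `execute(nodeId)`: that node's output;
// `startLevel`: 0 or 1, e.g. derived from the agent NFT's specifications.
function runOpoc(nodes, execute, startLevel = 0) {
  const results = new Map(); // nodeId -> output

  // Ask more nodes to execute until `count` nodes have produced a result.
  const engage = (count) => {
    for (const node of nodes.slice(0, count)) {
      if (!results.has(node)) results.set(node, execute(node));
    }
  };

  // Nodes whose output differs from the accepted one (candidates for penalties).
  const dissenters = (output) =>
    [...results].filter(([, out]) => out !== output).map(([node]) => node);

  if (startLevel === 0) {
    // Level 0: a single node's result is accepted optimistically.
    engage(LEVEL_SIZES[0]);
    const [output] = results.values();
    return { level: 0, output, dissenters: [] };
  }

  // Level 1: several nodes execute and must all agree.
  engage(LEVEL_SIZES[1]);
  const distinct = new Set(results.values());
  if (distinct.size === 1) {
    const [output] = distinct;
    return { level: 1, output, dissenters: [] };
  }

  // Level 2: a discrepancy was found, so engage more nodes and take the majority.
  engage(LEVEL_SIZES[2]);
  const votes = new Map();
  for (const out of results.values()) votes.set(out, (votes.get(out) || 0) + 1);
  for (const [output, count] of votes) {
    if (count > results.size / 2) return { level: 2, output, dissenters: dissenters(output) };
  }
  return { level: 2, output: null, dissenters: [] }; // no majority reached
}

// Example: node "n2" returns a different output, which forces escalation to level 2.
const nodes = ["n1", "n2", "n3", "n4", "n5", "n6", "n7"];
console.log(runOpoc(nodes, (node) => (node === "n2" ? "bad" : "ok"), 1));
```

Returning the dissenting nodes mirrors the idea that nodes producing wrong results can be penalized, while the majority output is what gets stored.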

AI Models are a fundamental component of the UOMI blockchain infrastructure, enabling the execution of complex operations that power the entire ecosystem. These models encompass a wide variety of architectures supporting different tasks, including:

Natural language processing

Image generation

Data analysis

Other AI-driven applications

The direct integration of AI models into the chain's infrastructure provides a seamless platform for autonomous and intelligent computations. Key features include:

Open Source: The models used within the UOMI ecosystem are primarily open-source, ensuring transparency and adaptability.

Deterministic Execution: To maintain consistency across the network, models are managed deterministically, ensuring that every node:

Processes the same input data

Produces identical outputs

AI models in UOMI are responsible for driving the intelligence behind agent interactions. Their role includes:

Processing input data

Generating meaningful outputs

Performing necessary computations for on-chain operations

This tight integration between AI and blockchain creates a robust environment where autonomous agents can operate effectively, leveraging the power of advanced AI technologies across diverse use cases.

The UOMI infrastructure is the backbone of our platform, designed to support the seamless integration of AI-driven agents within a decentralized blockchain environment. This section provides an overview of the key components that power UOMI, enabling robust, secure, and efficient operations for AI models and agents.

Explore how Nodes execute AI computations, delve into the various Models utilized—ranging from large language models (LLMs) to image generation frameworks—and discover how IPFS facilitates decentralized storage. Additionally, learn about the role of Agents in driving autonomous, intelligent economic interactions across the UOMI ecosystem.

The success of the UOMI platform relies on the contributions of several key participants:

The contract generates a unique REQUEST_ID and invokes a specific function of a precompiled contract, passing the REQUEST_ID along with the other user-supplied parameters.

Data Verification

The system checks the input data and retrieves associated NFT information.

It stores the request details in the Inputs storage and logs an event indicating the request has been accepted.

Consensus Levels

Depending on the NFT specifications, the system starts by assigning the execution to a random node.

Nodes are assigned to process requests based on current load and execution requirements.

The system monitors the execution and consensus among nodes, escalating to higher levels of OPOC if discrepancies arise.

Guaranteed Reliability: This approach ensures reliability and trust in the operations performed by agents.

Developer: The individual responsible for developing and deploying AI-AGENTS, bringing intelligence and functionality to the platform.

Staker: Provides the hardware required to run the blockchain nodes, ensuring the stability and performance of the network.

Delegator/Nominator: Supplies tokens to support and secure Stakers, helping to maintain the network’s integrity.

User: Uses the blockchain to run AI-AGENTS, benefiting from the decentralized, AI-powered services that UOMI enables.

Together, these participants contribute to building a robust, scalable, and interoperable ecosystem that pushes the boundaries of decentralized AI applications.

The Wasm section covers the Wasm stack on Uomi, some more advanced topics, and contains a few tutorials to help you build and deploy Wasm smart contracts.

The IPFS pallet provides decentralized storage capabilities to the blockchain through integration with the InterPlanetary File System (IPFS). It manages pinning, unpinning, and retrieval of files while ensuring data persistence and availability through validator consensus.

Persistent Pins: Files that remain pinned indefinitely

Temporary Pins: Files with an expiration block number

Validator-based Consensus: Content becomes available when pinned by a majority of validators

Agent Pins

Permanent pins associated with NFT IDs

Used for storing agent-related data

Can be updated with new CIDs

Temporary Pins

Time-limited storage

Minimum duration of 28,800 blocks (approximately 1 day)

Automatically unpinned after expiration

Validator Pinning:

Validators run offchain workers to process pin requests

Each validator maintains its own IPFS node

Files become "usable" when pinned by a majority (50% + 1) of validators

Pin Request:

User submits CID for pinning

System records pin request with expiration time

Processing:

ExpirationBlockNumber: When the pin expires (0 for permanent pins)

UsableFromBlockNumber: When content becomes available (post-majority pinning)

Requires minimum duration (28,800 blocks)

Creates temporary pin with expiration

Triggers validator pinning process

Creates permanent pin associated with NFT

Updates existing pins if necessary

Maintains single CID per NFT ID

Checks pin status and expiration

Verifies content is "usable"

Returns file content if available

Each block processes pin operations

Updates usable content status

Removes expired pins

Updates pin status based on validator consensus

Runs after each block

Processes pending pin requests

Updates local IPFS node

Submits pin status updates
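The pin bookkeeping described above can be modeled with a small in-memory registry. This is a sketch, not the pallet's code, and it makes two assumptions:

- the class and method names are hypothetical;
- a file's activation block is recorded as the block in which the validator majority is reached.

The 28,800-block minimum matches "approximately 1 day" if a block is produced every 3 seconds: 28,800 × 3 s = 86,400 s = 24 h. That block time is inferred, not stated in this guide.

```javascript
// Minimum temporary pin duration, in blocks, from the IPFS pallet description.
const MIN_TEMP_PIN_BLOCKS = 28800;

// Hypothetical in-memory model of the pallet's pin bookkeeping.
class PinRegistry {
  constructor(validators) {
    this.validators = new Set(validators);
    this.cidsStatus = new Map(); // CID -> { expiration, usableFrom } (expiration 0 = permanent)
    this.nodesPins = new Map(); // CID -> Set of validators that pinned it
  }

  // Majority threshold: more than half of the validator set.
  majority() {
    return Math.floor(this.validators.size / 2) + 1;
  }

  // pin_file: record a temporary pin that expires `duration` blocks after `now`.
  pinFile(cid, duration, now) {
    if (duration < MIN_TEMP_PIN_BLOCKS) {
      throw new Error(`duration must be at least ${MIN_TEMP_PIN_BLOCKS} blocks`);
    }
    this.cidsStatus.set(cid, { expiration: now + duration, usableFrom: null });
    this.nodesPins.set(cid, new Set());
  }

  // Called when an offchain worker reports that `validator` pinned `cid` on its IPFS node.
  reportPinned(cid, validator, now) {
    const status = this.cidsStatus.get(cid);
    if (!status || !this.validators.has(validator)) return;
    const pinnedBy = this.nodesPins.get(cid);
    pinnedBy.add(validator);
    // The file becomes usable the first time the majority threshold is reached.
    if (status.usableFrom === null && pinnedBy.size >= this.majority()) {
      status.usableFrom = now;
    }
  }

  // Per-block cleanup: forget temporary pins whose expiration block has been reached.
  onFinalize(now) {
    for (const [cid, status] of this.cidsStatus) {
      if (status.expiration !== 0 && status.expiration <= now) {
        this.cidsStatus.delete(cid);
        this.nodesPins.delete(cid);
      }
    }
  }
}

// Four validators, so three pins are needed before the file becomes usable.
const registry = new PinRegistry(["v1", "v2", "v3", "v4"]);
registry.pinFile("cid-example", 28800, 100);
["v1", "v2", "v3"].forEach((v, i) => registry.reportPinned("cid-example", v, 101 + i));
console.log(registry.cidsStatus.get("cid-example")); // usable from the block where v3 reported
```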

The SR25519 precompile provides an interface to verify a message signed with the Schnorr sr25519 algorithm.

Web3 Foundation has implemented a Schnorr signature library using the more secure Ristretto compression over Curve25519 in the Schnorrkel repository. Schnorrkel implements related protocols on top of this curve compression, such as HDKD, MuSig, and a verifiable random function (VRF). It also includes various minor improvements, such as the STROBE hashing scheme, which can theoretically process huge amounts of data with only one call across the Wasm boundary.

The implementation of Schnorr signatures used in Polkadot, which uses Schnorrkel protocols over a Ristretto compression of Curve25519, is known as sr25519.

For more details, see the Polkadot Wiki.

The verify function can be used to check that signature is a valid signature of message under public_key.

The Substrate ECDSA precompile provides an interface to verify a message signed with the ECDSA algorithm.

Most cryptocurrencies, including Bitcoin and Ethereum, currently use ECDSA signatures on the secp256k1 curve. This curve is considered much more secure than NIST curves, which have possible backdoors from the NSA. The Curve25519 is considered possibly even more secure than this one and allows for easier implementation of Schnorr signatures. A recent patent expiration on it has made it the preferred choice for use in Polkadot.

For more details, see the Polkadot Wiki.

The verify function can be used to check that signature is a valid signature of message under public_key.
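To illustrate what an ECDSA verification over secp256k1 checks, the sketch below uses Node's built-in crypto module to sign and verify a message. It is a conceptual example only. The precompile's exact input encoding (key format, signature layout and message hashing) is not described here. Node's defaults, a SHA-256 digest and DER-encoded signatures, may differ from what the precompile expects.

```javascript
const crypto = require("crypto");

// Generate a secp256k1 key pair (the curve used by Bitcoin and Ethereum).
const { publicKey, privateKey } = crypto.generateKeyPairSync("ec", { namedCurve: "secp256k1" });

const message = Buffer.from("hello uomi");

// Sign: hash the message with SHA-256, then produce a DER-encoded ECDSA signature.
const signature = crypto.sign("sha256", message, privateKey);

// Verify: true only if `signature` was produced over `msg` by the private key matching `pubKey`.
// Argument order mirrors the precompile's (public_key, signature, message).
function verify(pubKey, sig, msg) {
  return crypto.verify("sha256", msg, pubKey, sig);
}

console.log(verify(publicKey, signature, message)); // valid signature
console.log(verify(publicKey, signature, Buffer.from("tampered"))); // altered message fails
```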

UOMI, being built on Substrate and supporting dual Virtual Machine environments, utilizes a unique address system. The blockchain implements the SS58 address format, which is derived from Bitcoin's Base-58-check encoding with specific modifications to support network-specific addressing.

A key feature of the SS58 format is its network identifier prefix, which ensures addresses are uniquely associated with the UOMI network.

Due to UOMI's support for both EVM and Wasm smart contracts, the network operates with two distinct types of addresses:

Uses 256 bits

Based on Substrate's SS58 encoding

Used for native blockchain operations and Wasm contracts

Uses 160 bits

Begins with "0x" prefix

Compatible with Ethereum-style operations

Used for EVM contract interactions

This dual-address system allows UOMI to maintain compatibility with both ecosystems while preserving each environment's unique features and capabilities.
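A client that accepts both address types can route them with a quick format check. The heuristic below is an assumption for illustration, and the function name is hypothetical. It checks only the character set and a loose length window. It does not verify the SS58 checksum or network prefix, nor the optional EIP-55 mixed-case checksum of Ethereum addresses.

```javascript
// Ethereum-style H160: "0x" followed by 40 hex characters (42 characters in total).
const H160_PATTERN = /^0x[0-9a-fA-F]{40}$/;
// Characters allowed in Base58, and therefore in SS58: no 0, O, I or l.
const BASE58_PATTERN = /^[1-9A-HJ-NP-Za-km-z]+$/;

// Classifies an address string by format only.
function addressKind(address) {
  if (H160_PATTERN.test(address)) return "evm";
  // A 32-byte key plus prefix and checksum encodes to roughly 47-50 Base58 characters;
  // the window below is deliberately loose.
  if (BASE58_PATTERN.test(address) && address.length >= 46 && address.length <= 50) {
    return "substrate";
  }
  return "unknown";
}

console.log(addressKind("5GrwvaEF5zXb26Fz9rcQpDWS57CtERHpNehXCPcNoHGKutQY"));
console.log(addressKind("0x" + "ab".repeat(20)));
```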

Offchain workers detect new pin requests

Validators attempt to pin the content

System tracks pinning status per validator

Activation:

Content becomes "usable" once majority threshold is reached

System sets UsableFromBlockNumber so that requests received on-chain before the file became available do not use it

Expiration:

System tracks expiration through ExpirationBlockNumber

Automatic cleanup of expired pins

Validators remove expired content
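The rule that a file must not serve requests received before it became usable, or after it expired, reduces to a small predicate over a CidsStatus entry. In this sketch, the field names mirror the storage described here, while the function name and representing "not yet usable" as null are assumptions.

```javascript
// Decides whether a request received at `requestBlock` may use a file, given the file's
// CidsStatus entry. `usableFromBlockNumber` is null until a validator majority has pinned it
// (representation assumed for this sketch); an expiration of 0 marks a permanent pin.
function usableForRequest(status, requestBlock) {
  const { expirationBlockNumber, usableFromBlockNumber } = status;
  if (usableFromBlockNumber === null) return false; // majority not reached yet
  if (requestBlock < usableFromBlockNumber) return false; // request predates availability
  return expirationBlockNumber === 0 || requestBlock < expirationBlockNumber;
}

console.log(usableForRequest({ expirationBlockNumber: 500, usableFromBlockNumber: 100 }, 99));
console.log(usableForRequest({ expirationBlockNumber: 500, usableFromBlockNumber: 100 }, 100));
```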

function verify(
    bytes32 public_key,
    bytes calldata signature,
    bytes calldata message
) external view returns (bool);

Parameters: public_key, signature, message

function verify(
    bytes32 public_key,
    bytes calldata signature,
    bytes calldata message
) external view returns (bool);

NodesPins: (CID, AccountId) => bool
AgentsPins: NFTId => CID
CidsStatus: CID => (ExpirationBlockNumber, UsableFromBlockNumber)

pin_file(origin, cid: CID, duration: BlockNumber)
pin_agent(origin, cid: CID, nft_id: NFTId)
get_file(cid: CID) => Result<Vec<u8>>

Nodes are fundamental components of the UOMI ecosystem, serving multiple critical functions including blockchain maintenance, AI computation execution, and system-wide service integration. Each node type is optimized for specific roles within the network architecture.

Core Functions:

Maintains complete blockchain copy

Performs comprehensive transaction verification

Facilitates network transaction propagation

Key Features:

Stores complete historical state

Enables historical block queries

Supports data analytics and explorers

Capabilities:

Operates full node functionality

Participates in consensus

Implements intelligent state pruning

Uomi-node Service

Substrate-based blockchain runtime

Transaction processing engine

State management system

The AI-AGENT system interfaces with node services through:

Secure proxy functions

Protected communication channels

Resource allocation controls

Default P2P port: 30333

Default RPC port: 9944

Default WS port: 9944

Default Prometheus port: 9615

Network Security

Firewall configuration

Port access control

DDoS protection

Deployment

Use dedicated hardware

Implement monitoring

Regular maintenance

This comprehensive guide provides the foundation for understanding and implementing UOMI network nodes. For specific setup instructions, refer to our node deployment documentation.

This section will guide you through the process of attaching real-world information to your Uomi account.

As a validator, you want to be credible and recognizable to potential nominators. One way to establish the trustworthiness of your account is to attach real-world information to it, such as a display name, email, and website.

Go to the polkadot.js app and connect your validator wallet (created as the first step in Spin up a validator).

Go to Developer > extrinsics.

Select your validator account, then choose identity > setIdentity as the extrinsic.

The default fields are:

You don't need to fill in everything; leave any field you don't want to share set to "None".

For each field you want to add, click "None", select "Raw", and enter the information.

Then click "Submit Transaction" and sign and send it.

By clicking "Add new item", you can add custom fields (up to 100), entering first the name and then the data.

ink! is a Rust-based eDSL (embedded Domain-Specific Language) developed by Parity Technologies for writing smart contracts on Substrate's pallet-contracts.

💡 Why ink!? ink! is currently the most widely supported eDSL for Substrate-based smart contracts, with strong backing from both Parity and the builder community.

WASP (WebAssembly Agent System Platform) is a comprehensive development environment created by the UOMI team for building, testing, and deploying WebAssembly agents. It provides a simulation environment that mirrors the UOMI blockchain's behavior, allowing developers to create and test agents in a controlled environment before deployment.

Running a full node on Uomi allows you to connect to the network, sync with a bootnode, obtain local access to RPC endpoints, author blocks, and more.

Unlike an archive node, a full node discards finalized blocks older than a configured number of blocks (256 by default). Because of this pruning, a full node occupies less storage space than an archive node.

A full node may eventually be able to rebuild the entire chain with no additional information, and become an archive node, but at the time of writing, this is not implemented. If you need to query historical blocks past what you pruned, you need to purge your database and resync your node starting in archive mode. Alternatively you can use a backup or snapshot of a trusted source to avoid needing to sync from genesis with the network, and only need the blocks past that snapshot. (reference: )

If your node needs to serve historical block data, consider running an archive node instead.

IPFS (InterPlanetary File System) serves as the decentralized file system for the UOMI blockchain. It is an integral component of the platform, enabling seamless storage and retrieval of files required for the execution of AI operations.

Decentralized Storage: IPFS ensures that files are stored in a distributed network, eliminating single points of failure and improving data availability.

Efficient File Access: AI agents require access to various files, including models and data inputs, to perform their computations. IPFS provides a robust mechanism to store and retrieve these files securely.

ink! provides developers with powerful tools and features:

Write smart contracts using idiomatic Rust code

Leverage ink! macros and attributes via #[ink::contract]

Utilize trait definitions and implementations

Create upgradeable contracts through delegate calls

Interact with Substrate pallets using Chain Extensions

Perform off-chain testing with #[ink(test)]

Procedural macros for simplified development

Comprehensive crate ecosystem

Reduced boilerplate code requirements

⚙️ Getting Started: For installation instructions, visit the ink! Environment section.

IPFS acts as the backbone for managing the data lifecycle of AI models and inputs used by agents. By leveraging IPFS, the UOMI blockchain ensures seamless access to files for computations while maintaining their immutability to preserve data integrity. Additionally, the distributed nature of IPFS optimizes performance by efficiently spreading data across the network.

1 | Qwen2.5 | Qwen/Qwen2.5-32B-Instruct-GPTQ-Int4 | Language Model | 32 Billion

Qwen2.5 is a large language model developed by Alibaba's Qwen team. This model brings several key innovations:

High-Performance Instruction Following: Specifically designed to understand and execute complex instructions with remarkable accuracy.

Efficient Quantization: Utilizing GPTQ (Generative Pretrained Transformer Quantization) with INT4 precision, the model maintains high performance while reducing computational requirements.

Broad Capability Range: Excels in tasks such as:

Natural language understanding

Text generation

Contextual reasoning

Multilingual communication

Technical Specifications:

Model Size: 32 Billion parameters

Quantization: INT4

Optimization: GPTQ

Primary Use: Instruction-based AI interactions

Last Updated: February 2025
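The effect of INT4 quantization on memory can be checked with simple arithmetic. Weight memory is roughly parameters × bits per parameter ÷ 8 bytes. For 32 billion parameters, that is about 16 GB at INT4 versus about 64 GB at FP16.

This is a rough lower bound. It ignores the KV cache, activations and quantization metadata, so actual GPU memory use is higher. For reference, an RTX 4090, the card listed in the validator requirements, has 24 GB of VRAM. The helper name is illustrative.

```javascript
// Rough lower bound on the memory needed just to hold model weights.
// Ignores KV cache, activations and quantization metadata (scales, zero-points).
function weightMemoryGB(parameters, bitsPerParameter) {
  return (parameters * bitsPerParameter) / 8 / 1e9; // bits -> bytes -> GB (decimal)
}

const int4 = weightMemoryGB(32e9, 4); // figures from the specification above
const fp16 = weightMemoryGB(32e9, 16); // same model unquantized, for comparison
console.log(`INT4: ~${int4} GB, FP16: ~${fp16} GB`);
```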

Supports network stability and decentralization

Maintains network transparency

Optimizes storage efficiency

Executes Agents

Network communication protocol

AI Service

Dedicated computation engine

Model execution environment

Agent interaction interface

Resource management system

Node type | CPU | RAM | Storage | Network | GPU
Full | 4+ cores | 8GB+ | 100GB+ | 1 Gbps | -
Archive | 8+ cores | 16GB+ | 500GB+ | 1 Gbps | -
Validator | 8+ cores | 16GB+ | 100GB+ | 1 Gbps | 2 x RTX 4090+

Secure communication protocols

System Security

Regular updates

Access control

Resource isolation

Monitoring and alerting

Data Security

State encryption

Secure key management

Backup procedures

Recovery protocols

Performance optimization

Operation

Regular backups

Update management

Resource monitoring

Performance tuning

Maintenance

Regular updates

Security patches

Performance monitoring

Capacity planning

Field | Description
Display | Name displayed on-chain when validating blocks
Legal | Legal name
Web | Website
Riot | Element username
Email | E-mail address
Image | Validator image
Twitter | Twitter link/username

To deploy contracts to UOMI networks, you'll need to export your private key from MetaMask:

Open MetaMask

Select your account

Click the three dots menu

Go to "Account Details"

Select "Export Private Key"

Enter your password to confirm

You'll get a 64-character hex string like:

2. Store Your Private Key

Create private.json in your project root:

⚠️ Security Warning: Never commit your private key to version control. Add private.json to your .gitignore file.
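A small loader can fail fast on a malformed key before it reaches your network configuration. The helper names below are hypothetical. The check only enforces the 64-character hex format described in the export step, and it returns the key with a 0x prefix, the usual convention in Ethereum tooling.

```javascript
const fs = require("fs");

// Accepts the 64-character hex string exported from MetaMask, with or without a 0x prefix,
// and returns it in lowercase 0x-prefixed form.
function normalizePrivateKey(raw) {
  const key = String(raw).trim().replace(/^0x/i, "");
  if (!/^[0-9a-fA-F]{64}$/.test(key)) {
    throw new Error("private.json: expected a 64-character hex private key");
  }
  return "0x" + key.toLowerCase();
}

// Reads private.json from the project root and validates the key it contains.
function loadPrivateKey(path = "./private.json") {
  const { privateKey } = JSON.parse(fs.readFileSync(path, "utf8"));
  return normalizePrivateKey(privateKey);
}

// In hardhat.config.js you could then use: accounts: [loadPrivateKey()]
```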

3. Configure Networks

Modify your hardhat.config.js:

4. Deploy Your Contract

Install the HD Wallet Provider:

Modify your truffle-config.js:

Deploy to your chosen network:

💡 Note: If no network is specified, Truffle will use the default development network.

/agent-template)

Core Files

lib.rs: Core agent logic

utils.rs: Utility functions and blockchain interactions

Protected Functions

The utils.rs file contains essential offchain API functions that must not be modified. These are marked with a specific comment block:

These functions provide core functionality for:

Blockchain communication

Logging and debugging

Memory management

Security operations

⚠️ WARNING: The host directory simulates blockchain node behavior. Modifying its contents may lead to inconsistent behavior between development and production environments.

WebAssembly-based agent execution

Blockchain node simulation

Built-in debugging capabilities

Frontend integration tools

Hot-reloading development environment

WASP implements a three-layer architecture:

Host Layer - Simulates blockchain node behavior

Agent Layer - Contains the WebAssembly agent code

Frontend Layer - Provides user interface and interaction

The requirements for running any node are similar to those we recommend for an archive node. Read more about this here. A full node, however, needs less disk space: the archive node's hard-disk requirement does not apply to full nodes.

To set up a full node, use the --pruning flag to specify how many recent blocks' state to keep (older state is pruned):

--pruning 1000 \

60ed0dd24087f00faea4e2b556c74ebfa2f0e705f8169733b01530ce4c619883

{

"privateKey": "YOUR_PRIVATE_KEY_HERE"

}

const { privateKey } = require("./private.json");

module.exports = {

networks: {

// Finney Testnet

finney: {

url: "https://finney.uomi.ai",

chainId: XXX,

accounts: [privateKey],

},

}

};

npx hardhat run --network finney scripts/deploy.js

npm install @truffle/hdwallet-provider

const HDWalletProvider = require('@truffle/hdwallet-provider');

const { privateKey } = require('./private.json');

module.exports = {

networks: {

// Finney Testnet

finney: {

provider: () => new HDWalletProvider(

privateKey,

'https://finney.uomi.ai'

),

network_id: XXX,

},

}

};

truffle migrate --network finney

// ===========================================================

// =============== Offchain API, DO NOT MODIFY ===============

// ===========================================================

Frontend (main.js) <-> Host Simulation <-> WebAssembly Agent

wasp-project/

├── host/ # Blockchain node simulation

├── agent-template/ # Agent development environment

│ └── src/

│ ├── lib/ # Core agent functionality

│ ├── utils/ # Utility functions

│

└── main.js # User interface

The Finney testnet provides two dedicated RPC (Remote Procedure Call) endpoints. Both run as archive nodes, giving developers and users access to the network's full historical data and current state.

Complete historical state access

Full block history from genesis

State queries at any block height

Transaction receipt retrieval for all historical transactions

Both endpoints support the standard JSON-RPC methods including:

Ethereum JSON-RPC API (eth_*)

Net API (net_*)

Web3 API (web3_*)

Debug API (debug_*)

Load Balancing

Alternate between both endpoints for optimal performance

Implement retry logic with endpoint switching

Rate Limiting

For technical issues or questions:

Join our Discord community

Open a GitHub issue

Contact our developer support team

Chain ID: 4386

Block Time: 3s

Both endpoints are continuously monitored for:

Uptime

Response time

Sync status

Block height consistency

Embedded Domain-Specific Languages (eDSLs) are specialized programming tools that enhance blockchain and smart contract development. These tools operate within existing programming languages, providing developers with a more intuitive and efficient way to write code.

💡 Key Benefit eDSLs allow developers to write smart contracts at a higher level of abstraction, making code more readable, maintainable, and less prone to errors.

eDSLs offer several advantages for blockchain development:

More expressive and intuitive code writing

Built-in error checking mechanisms

Enhanced debugging capabilities

Specialized testing frameworks

For example, rather than using pure Rust for Wasm smart contracts, developers can use specialized Rust eDSLs designed specifically for blockchain development, making the code more natural and easier to maintain.

ℹ️ What is ink!? ink! is a Rust-based eDSL developed by Parity Technologies, specifically designed for Substrate's pallet-contracts.

Key Features

Rust procedural macros support

Comprehensive crate ecosystem

Reduced boilerplate code

Direct integration with pallet-contracts API

Resources

🚧 Development Status ask! is a Polkadot treasury funded project currently under active development.

Overview

Framework for AssemblyScript developers

TypeScript-like syntax

Targets pallet-contracts for Wasm smart contracts

Resources

When selecting an eDSL for your project, consider:

Programming Language Familiarity

ink! for Rust developers

ask! for TypeScript/AssemblyScript developers

Project Requirements

📚 Learn More For detailed information about using these eDSLs, refer to their respective documentation and GitHub repositories.

An archive node stores the history of past blocks. Most of the time, an archive node is used as an RPC endpoint. RPC plays a vital role in our network: it connects users and dApps to the blockchain through WebSocket and HTTP endpoints.

dApp projects should run their own archive RPC node to retrieve the blockchain data they need, rather than rely on public infrastructure. Public endpoints respond more slowly because of the large number of connected users, and they are rate limited.

CAUTION Be careful not to confuse an archive node with a full node that has a pruned database: a full node only stores the current state and the most recent blocks (256 blocks by default) and uses much less storage space.

We maintain two networks: the testnet Uomi Finney and the mainnet Uomi.

The Uomi node uses the following ports:

For all node types, port 30333 must be open for incoming traffic at the firewall. Validator nodes should not expose their WS and RPC ports to the public.

Using - run the node from a binary file and set it up as a systemd service.

A validator plays an essential role in our network and is responsible for crucial tasks, including block production and transaction confirmation. A validator must remain highly available and responsive to keep the Uomi ecosystem running smoothly.

Validators maintain our ecosystem by collecting transactions from users and validating blocks, thereby securing the network.

Performance of the network depends directly on validators. To ensure optimal performance of the network, a slashing mechanism is implemented.

Validators are a key element of UOMI-ENGINE: they execute agents when a user makes a request, and they save the output for the OPoC.

To join the election process, you must register as a validator and bond tokens; see Validator Requirements for details. When your node meets the parameters and checks all the boxes to become a validator, it will be added to the chain. Note: if your validator doesn't produce blocks during two sessions (2h), it will be kicked out.

For every block you produce as a validator, rewards are automatically transferred to your account. The reward is the sum of the block reward, fees, and agent fees.

A slashing mechanism is implemented on Uomi and Finney networks - a validator that doesn't produce blocks during two sessions (2 hours) will be slashed 1% of its total stake and kicked out of the active validator set. This slashing ensures the best block rate and prevents malicious actors from harming the network without financial consequences.
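The slashing rule above can be written as a small pure function. This is a minimal sketch. It assumes the 1% is taken from the validator's total stake and rounded down. `check_offline`, `OfflineOutcome`, and the `threshold` parameter are hypothetical names used for illustration only; they are not part of the Uomi runtime. The threshold is a parameter so you can set it to the two sessions described in this paragraph.

```rust
/// Result of the offline check for one validator (hypothetical type).
#[derive(Debug, PartialEq)]
pub struct OfflineOutcome {
    pub remaining_stake: u128,
    pub slashed: u128,
    pub kicked: bool,
}

/// Slash 1% of `stake` and kick the validator once the number of sessions
/// without a produced block reaches `threshold` (2 per the rule above).
pub fn check_offline(stake: u128, sessions_without_blocks: u32, threshold: u32) -> OfflineOutcome {
    if sessions_without_blocks >= threshold {
        // 1% of the total stake, rounded down (rounding is an assumption).
        let slashed = stake / 100;
        OfflineOutcome { remaining_stake: stake - slashed, slashed, kicked: true }
    } else {
        // Still within the allowed window: no penalty.
        OfflineOutcome { remaining_stake: stake, slashed: 0, kicked: false }
    }
}
```

For example, a validator with 1,000,000 units bonded that misses two full sessions would lose 10,000 units and leave the active set.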

The Model Updates Integrity system ensures seamless and secure transitions between different AI model versions, maintaining the reliability of AI operations across the UOMI network. This process is critical for guaranteeing that AI agents run the appropriate model versions during updates, thereby safeguarding the consistency and accuracy of computations.

Two key on-chain storages are utilized to manage model updates:

AiModels:

Stores the list of valid AI models that agents can utilize.

Each model is identified by a unique UOMI_KEY and includes:

During the update process, the system ensures that nodes and agents operate on consistent model versions:

Version Identification:

At each block validation or after a set number of blocks, nodes identify the version used by the majority by reading from NodesVersions.

Model Transition:

Block 15: A request is added to the chain.

Block 16: The initial phase of computation begins, using model llama-2.0.0. The validator updates AiModels to switch to llama-2.1.0 from block 16, keeping llama-2.0.0 as the old model.

Block 18: The computation continues using the older model for requests initiated before block 16.

Nodes must be capable of running both the old and new models during transitions to ensure uninterrupted service and accuracy. This dual compatibility is essential to maintain consistent operations throughout the network update process.

UOMI's runtime is built on Substrate and incorporates pallet-contracts, providing a sandboxed environment for WebAssembly smart contracts. While any language that compiles to Wasm can potentially be used, the code must be compatible with the pallet-contracts API.

💡 Tip For efficient development, it's recommended to use an eDSL (embedded Domain-Specific Language) targeting pallet-contracts, such as:

A precompile is a common functionality used in smart contracts that has been compiled in advance, so Ethereum nodes can run it more efficiently. From a contract's perspective, this is a single command like an opcode. The Frontier EVM used on Uomi network provides several useful precompiled contracts. These contracts are implemented in our ecosystem as a native implementation. The precompiled contracts 0x01 through 0x08 are the same as those in Ethereum (see list below). Additionally, Uomi implements precompiled contracts that support new Uomi features.

Smart contracts on UOMI are programs that run on the Ethereum Virtual Machine (EVM). These self-executing contracts contain code and data that live at a specific address on the blockchain. Being EVM-compatible, UOMI supports the same smart contract functionality as Ethereum, allowing developers to write and deploy contracts using familiar tools and languages.

Validator staking requirements

Bond: an amount of UOMI tokens (not yet defined)

Meet hardware requirements

If your node stops producing blocks for 1 session, your node will be kicked out of the active set and 1% of the bonded funds will be slashed. Running a node with low performance can lead to skipping blocks which may result in being kicked out of the active set.

LOCAL_NAME: The local name of the model version (e.g., llama-2.0.0).

USABLE_FROM_BLOCK_NUMBER: The block number from which the model becomes usable.

OLD_LOCAL_NAME: The previous version of the model used before the update.

NodesVersions:

Contains the version details of each node.

Nodes periodically update this storage with their current version via the offchain_worker.

When the majority version changes, the validator updates AiModels with the corresponding LOCAL_NAME, USABLE_FROM_BLOCK_NUMBER, and OLD_LOCAL_NAME. This allows a smooth transition in which agents can keep using the older model until the majority has switched to the new version.
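Version identification comes down to counting the versions recorded in NodesVersions. The sketch below models that storage as a plain map from node ID to version string. It treats "majority" as strictly more than half of all nodes. Both the data layout and the strict-majority rule are assumptions, and `majority_version` is a hypothetical helper.

```rust
use std::collections::HashMap;

/// Return the version reported by strictly more than half of the nodes.
/// `nodes_versions` stands in for the NodesVersions storage (node id -> version).
pub fn majority_version(nodes_versions: &HashMap<String, String>) -> Option<String> {
    // Count how many nodes report each version.
    let mut counts: HashMap<&str, usize> = HashMap::new();
    for version in nodes_versions.values() {
        *counts.entry(version.as_str()).or_insert(0) += 1;
    }
    let total = nodes_versions.len();
    // A strict majority needs more than half of all reporting nodes.
    counts
        .into_iter()
        .find(|&(_, n)| n * 2 > total)
        .map(|(version, _)| version.to_string())
}
```

At most one version can hold a strict majority, so the result does not depend on the map's iteration order. A tie returns `None`, which here means "keep the current model".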

Block 20: New requests use the updated model llama-2.1.0, as the transition has been completed.
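The transition example above can be sketched as a lookup on a single AiModels entry. In that example, a request is added at block 15, the switch takes effect from block 16, and new requests use llama-2.1.0 by block 20. The struct and method names below are hypothetical. The fields mirror LOCAL_NAME, USABLE_FROM_BLOCK_NUMBER, and OLD_LOCAL_NAME. The selection rule, "requests added before USABLE_FROM_BLOCK_NUMBER keep the old model", is an assumption drawn from that example.

```rust
/// Hypothetical mirror of one AiModels entry.
pub struct AiModel {
    pub local_name: String,             // LOCAL_NAME
    pub usable_from_block_number: u64,  // USABLE_FROM_BLOCK_NUMBER
    pub old_local_name: Option<String>, // OLD_LOCAL_NAME
}

impl AiModel {
    /// Pick the model version a request should run on, based on the block
    /// in which the request was added to the chain.
    pub fn version_for_request(&self, request_block: u64) -> &str {
        if request_block < self.usable_from_block_number {
            // Requests created before the switch keep the old model, if any.
            self.old_local_name
                .as_deref()
                .unwrap_or(self.local_name.as_str())
        } else {
            self.local_name.as_str()
        }
    }
}
```

Because a request's model is fixed by the block it was added in, nodes must keep both models loaded until older requests have finished.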

A validator can deploy its node on a local or remote server. You can choose your preferred provider for dedicated servers and your preferred operating system, though we highly recommend Linux. Generally speaking, we recommend selecting a provider/server in your region, as this increases the decentralization of the network.

Hardware requirements

Use the charts below to find the basic configuration that guarantees all blocks can be processed in time. If your hardware doesn't meet these requirements, it is likely to malfunction, and you risk being automatically kicked out of the active set and slashed.

Validators require the most powerful and fastest machines, because they have only a very short time frame to assemble and validate each block. To run a validator, you must use a CPU with at least 4 GHz per core, an NVMe SSD (SATA SSDs are too slow for validators), and 2 x RTX 4090 GPUs (or similar).

System: Ubuntu 22.04

CPU: 12 cores, minimum 4 GHz per core

Memory: 64 GB

Hard Disk: 1 TB SSD NVMe

GPU: 2 x RTX 4090 (or equivalent)

Smart contract complexity

Performance needs

Team expertise

Development Status

ink! is production-ready

ask! is under development

Testnet

Finney

$UOMI

Mainnet

Uomi

$UOMI

System: Ubuntu 22.04

CPU: 8 cores

Memory: 16 GB

Hard Disk: 500 GB SSD (NVMe preferable)

P2P: 30333 (--port)

RPC: 9944 (--rpc-port)

Prometheus: 9615 (--prometheus-port)

Respect rate limits to ensure fair usage

Implement appropriate caching strategies

Error Handling

Always implement proper error handling

Monitor response times and implement timeouts
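The load-balancing and retry advice above can be combined into a small round-robin pool that fails over to the other Finney endpoint. This is only a sketch, and `EndpointPool` is a hypothetical type. The actual HTTP call, including its timeout, is left to a closure supplied by the caller, so the example does not assume any particular RPC client library.

```rust
/// Round-robin pool of RPC endpoints with failover (hypothetical helper).
pub struct EndpointPool {
    endpoints: Vec<String>,
    next: usize,
}

impl EndpointPool {
    pub fn new(endpoints: Vec<String>) -> Self {
        Self { endpoints, next: 0 }
    }

    /// Try each endpoint at most once, starting with the next one in the
    /// rotation. `send` performs the real request (and should enforce its
    /// own timeout). Returns the first success, or every error collected.
    pub fn call<T, E>(&mut self, mut send: impl FnMut(&str) -> Result<T, E>) -> Result<T, Vec<E>> {
        let mut errors = Vec::new();
        for _ in 0..self.endpoints.len() {
            let idx = self.next;
            // Advance the rotation so consecutive calls alternate endpoints.
            self.next = (self.next + 1) % self.endpoints.len();
            match send(self.endpoints[idx].as_str()) {
                Ok(value) => return Ok(value),
                Err(err) => errors.push(err),
            }
        }
        Err(errors)
    }
}
```

Alternating on every call spreads the load across both archive nodes. Retrying on the other endpoint means that one slow or unavailable node does not fail the request.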

https://finney.uomi.ai: Primary Archive Node (Active)

https://finney2.uomi.ai: Secondary Archive Node (Active)

Replays a transaction in the exact manner it was executed on the network.

Optional parameters:

disableStorage: (default: false) Disables storage capture

disableMemory: (default: false) Disables memory capture

disableStack: (default: false) Disables stack capture

Returns a full stack trace of all invoked opcodes for all transactions in a block.

Variants:

debug_traceBlockByHash

debug_traceBlockByNumber

Executes an eth-call-like operation within the context of a given block.

Filters and retrieves trace data based on specific criteria.

Parameters:

fromBlock: Starting block number

toBlock: Ending block number

fromAddress: Filter transactions from these addresses

toAddress: Filter transactions to these addresses

after: Trace offset (default: 0)

count: Number of traces to return

⚠️ Important Limits

Maximum 500 trace entries per request

Trace cache duration: 300 seconds
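A single trace_filter request returns at most 500 entries, so larger result sets must be paged with the `after` and `count` parameters. The sketch below computes the pages and builds each JSON-RPC body in the same shape as the trace_filter curl example. `trace_pages` and `trace_filter_body` are hypothetical helpers, and the address is a placeholder.

```rust
/// Maximum trace entries per trace_filter request, as documented above.
pub const MAX_TRACE_COUNT: u64 = 500;

/// Split `total` traces into (after, count) pages of at most `page_size`
/// entries, with the page size capped at MAX_TRACE_COUNT.
pub fn trace_pages(total: u64, page_size: u64) -> Vec<(u64, u64)> {
    let size = page_size.clamp(1, MAX_TRACE_COUNT);
    let mut pages = Vec::new();
    let mut after = 0;
    while after < total {
        let count = size.min(total - after);
        pages.push((after, count));
        after += count;
    }
    pages
}

/// Build the JSON-RPC body for one page of a trace_filter query.
pub fn trace_filter_body(from_block: u64, to_block: u64, to_address: &str, after: u64, count: u64) -> String {
    format!(
        r#"{{"jsonrpc":"2.0","id":1,"method":"trace_filter","params":[{{"fromBlock":"{}","toBlock":"{}","toAddress":["{}"],"after":{},"count":{}}}]}}"#,
        from_block, to_block, to_address, after, count
    )
}
```

Keep the pages well inside the 300-second trace cache window; otherwise later pages may be computed against a recomputed trace set.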

To access these debugging features, you need to run a node with specific debug flags enabled.

Check the transaction pool status:

💡 Note The txpool API requires the --ethapi=txpool flag when starting the node.

Node Configuration

Enable only the debug features you need

Consider memory usage when setting cache sizes

Monitor node performance with tracing enabled

API Usage

Use specific filters to limit data returned

Consider pagination for large trace requests

Cache commonly requested trace data

Request Timeout: Reduce the trace range or add more filters

Memory Issues: Adjust cache size and duration

Missing Data: Verify node sync status and cache duration

ECRecover: 0x0000000000000000000000000000000000000001

Sha256: 0x0000000000000000000000000000000000000002

Ripemd160: 0x0000000000000000000000000000000000000003

Identity: 0x0000000000000000000000000000000000000004

Modexp: 0x0000000000000000000000000000000000000005

Bn128Add: 0x0000000000000000000000000000000000000006

Bn128Mul: 0x0000000000000000000000000000000000000007

Bn128Pairing: 0x0000000000000000000000000000000000000008

Sr25519: 0x0000000000000000000000000000000000005002

SubstrateECDSA: 0x0000000000000000000000000000000000005003

Assets-erc20: ASSET_PRECOMPILE_ADDRESS_PREFIX

UomiEngine: 0x00000000000000000000000000000000756f6D69

The interface descriptions for these precompiles can be found in the precompiles folder of the Uomi repo. The addresses for each runtime can be checked in the precompile.rs files of the Uomi repo.
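Each fixed precompile address in the table is a small integer stored in the low-order bytes of a 20-byte address. For example, the UomiEngine address 0x...756f6D69 ends in the ASCII bytes for "uomi". The helper below is a hypothetical illustration of that encoding, not code from the Uomi repo. It does not cover the Assets-erc20 precompiles, which use ASSET_PRECOMPILE_ADDRESS_PREFIX.

```rust
/// Build a 20-byte address whose low-order 8 bytes hold `n` (big-endian).
pub fn precompile_address(n: u64) -> [u8; 20] {
    let mut addr = [0u8; 20];
    addr[12..].copy_from_slice(&n.to_be_bytes());
    addr
}

/// Render an address as 0x-prefixed lowercase hex.
pub fn to_hex(addr: &[u8; 20]) -> String {
    let mut s = String::from("0x");
    for byte in addr {
        s.push_str(&format!("{:02x}", byte));
    }
    s
}
```

`to_hex` produces lowercase hex. Ethereum addresses are case-insensitive apart from optional EIP-55 checksum casing, so the output refers to the same address as the mixed-case entry in the table.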

Here we'll demonstrate a simple smart contract that interacts with the Sr25519 precompile:

const Web3 = require('web3');

const web3 = new Web3('https://finney.uomi.ai');

const { ethers } = require('ethers');

const provider = new ethers.providers.JsonRpcProvider('https://finney.uomi.ai');

curl http://127.0.0.1:9944 -H "Content-Type:application/json;charset=utf-8" -d \

'{

"jsonrpc":"2.0",

"id":1,

"method":"debug_traceTransaction",

"params": ["YOUR-TRANSACTION-HASH"]

}'

curl http://127.0.0.1:9944 -H "Content-Type:application/json;charset=utf-8" -d \

'{

"jsonrpc":"2.0",

"id":1,

"method":"trace_filter",

"params":[{

"fromBlock":"4142700",

"toBlock":"4142800",

"toAddress":["0xYOUR-ADDRESS"],

"after":0,

"count":20

}]

}'

--ethapi=debug # Enables debug_traceTransaction

--ethapi=trace # Enables trace_filter

--ethapi=txpool # Enables transaction pool APIs

--runtime-cache-size 64

--ethapi-trace-max-count <number> # Maximum trace entries

--ethapi-trace-cache-duration <seconds> # Cache duration

curl http://127.0.0.1:9944 -H "Content-Type:application/json;charset=utf-8" -d \

'{

"jsonrpc":"2.0",

"id":1,

"method":"txpool_status",

"params":[]

}'

// SPDX-License-Identifier: GPL-3.0-or-later

pragma solidity ^0.8.0;

contract Sr25519Caller {

address constant precompileAddress = 0x0000000000000000000000000000000000005002;

function verify(bytes32 publicKey, bytes memory signature, bytes memory message) public view returns (bool) {

(bool success, bytes memory result) = precompileAddress.staticcall(

abi.encodeWithSignature("verify(bytes32,bytes,bytes)", publicKey, signature, message)

);

require(success, "Failed to call precompile");

return abi.decode(result, (bool));

}

}

ink! (Rust-based)

ask! (AssemblyScript-based)

pallet-contracts uses the wasmi interpreter to execute Wasm smart contracts.

ℹ️ Note While faster JIT-based runtimes like wasmtime exist, wasmi is chosen for its higher degree of execution correctness, which is crucial in the untrusted environment of smart contracts.

Contract deployment follows a two-step process:

Code Upload

Upload Wasm contract code to the blockchain

Each contract receives a unique code_hash identifier

Contract Instantiation

Create contract address and storage

Anyone can instantiate a contract using its code_hash

Benefits of Two-Step Deployment

Storage Efficiency

Multiple instances can share the same code

Reduces on-chain storage requirements

Particularly efficient for standard tokens (like PSP22 & PSP34)

Flexible Deployment

Create new instances from existing contracts

Use code_hash for contract instantiation within other contracts

Single upload for standard contracts, reducing gas costs

Upgradability

Update contract code while preserving storage and balances

Use set_code_hash to replace contract code at specific addresses
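The storage benefit of two-step deployment comes from content addressing. Code is stored once under its hash, and each instance records only the code hash it runs plus its own storage. The toy model below illustrates this idea. It is not pallet-contracts code, and it uses Rust's non-cryptographic DefaultHasher as a stand-in for the real code-hash function. `ToyChain` and its methods are hypothetical.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Toy model: code blobs keyed by hash, instances keyed by address.
pub struct ToyChain {
    code: HashMap<u64, Vec<u8>>,              // code_hash -> Wasm blob
    instances: HashMap<u64, (u64, Vec<u8>)>,  // address -> (code_hash, storage)
    next_address: u64,
}

impl ToyChain {
    pub fn new() -> Self {
        Self { code: HashMap::new(), instances: HashMap::new(), next_address: 1 }
    }

    /// Step 1: upload code. Identical code maps to the same hash and is stored once.
    pub fn upload_code(&mut self, wasm: &[u8]) -> u64 {
        let mut hasher = DefaultHasher::new();
        wasm.hash(&mut hasher);
        let code_hash = hasher.finish();
        self.code.entry(code_hash).or_insert_with(|| wasm.to_vec());
        code_hash
    }

    /// Step 2: instantiate from an existing code hash and return the new address.
    pub fn instantiate(&mut self, code_hash: u64) -> Option<u64> {
        if !self.code.contains_key(&code_hash) {
            return None;
        }
        let address = self.next_address;
        self.next_address += 1;
        self.instances.insert(address, (code_hash, Vec::new()));
        Some(address)
    }

    /// Analogue of set_code_hash: point an instance at new code, keeping its storage.
    pub fn set_code_hash(&mut self, address: u64, new_hash: u64) -> bool {
        if !self.code.contains_key(&new_hash) {
            return false;
        }
        match self.instances.get_mut(&address) {
            Some(instance) => {
                instance.0 = new_hash;
                true
            }
            None => false,
        }
    }

    /// Number of distinct code blobs held, regardless of instance count.
    pub fn stored_code_blobs(&self) -> usize {
        self.code.len()
    }
}
```

Two token instances that share the same code cost one code blob plus two small storage entries. This is the saving that standards such as PSP22 benefit from.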

🔧 Available Tools

Polkadot.js API for blockchain interaction via JavaScript

contracts-ui web application for contract interaction

Architecture

Ethereum clients

Substrate

Runtime Environment

EVM

Wasm pallet-contract + EVM frontier

Gas Model

Fixed price per instruction

Weight + storage fees + loading fees

Smart Contract DSLs

Solidity and Vyper

ink! (Rust) and ask! (AssemblyScript)

pallet-contracts Rust Documentation

pallet-contracts GitHub Repository

Polkadot.js API Documentation

📚 Further Reading For more detailed information about pallet-contracts, visit the Rust docs or GitHub repository.

The native fee calculation follows the standard Substrate model:

Where:

Length Fee: Proportional to transaction byte size

Base Fee: Fixed cost per transaction

Weight Fee: Computational resources cost

Adjustment: Dynamic scaling factor based on network congestion

Tip: Optional priority payment
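The sketch below is a worked example of Fee = Length Fee + Base Fee + (Weight Fee × Adjustment) + Tip. It represents the adjustment multiplier as an integer in parts per million, where 1_000_000 means 1.0. That scale is an assumption chosen for readability: Substrate's transaction-payment pallet uses a fixed-point Multiplier type. `native_fee` is a hypothetical helper.

```rust
/// 1.0 expressed in parts per million (scale chosen for this sketch).
pub const ONE_PPM: u128 = 1_000_000;

/// Fee = Length Fee + Base Fee + (Weight Fee × Adjustment) + Tip,
/// where `adjustment_ppm` is the congestion multiplier in ppm.
pub fn native_fee(length_fee: u128, base_fee: u128, weight_fee: u128, adjustment_ppm: u128, tip: u128) -> u128 {
    // Only the weight fee is scaled by the congestion adjustment.
    let adjusted_weight_fee = weight_fee * adjustment_ppm / ONE_PPM;
    length_fee + base_fee + adjusted_weight_fee + tip
}
```

Take a length fee of 100, a base fee of 50, a weight fee of 1,000, a 1.5× adjustment, and a tip of 10. The fee is 100 + 50 + 1,500 + 10 = 1,660 units.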

The fee adjustment mechanism ensures network stability by:

Scaling fees based on block space utilization

Implementing surge pricing during high congestion

Maintaining predictable base costs for standard operations

For EVM transactions, UOMI's fee pallet implements EIP-1559-style fee calculation:

Base Fee Adjustment:

Elasticity Mechanism:

Adjusts base fee according to block utilization

Implements upper and lower bounds

Maintains target block utilization

The base fee adjusts according to network conditions:

Fees automatically adjust based on block fullness

Target block utilization maintained through elasticity

Smooth fee transitions prevent sudden spikes
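One step of the elasticity mechanism can be simulated outside the runtime. The sketch mirrors the pallet's Permill logic: utilization is clamped to bounds, and the coefficient equals twice the distance from the target. It works in plain parts-per-million integers. Two details are assumptions about how the coefficient is used: scaling it by an elasticity factor, and applying it proportionally to the current base fee. The function names are hypothetical. `evm_fee` applies Fee = Gas Used × (Base Fee Per Gas + Priority Fee Per Gas).

```rust
/// Parts-per-million scale, mirroring Permill (1_000_000 == 100%).
pub const PPM: u64 = 1_000_000;

/// Block utilization as used / max in ppm, clamped to [lower, upper].
/// `max` must be non-zero.
pub fn utilization_ppm(used: u64, max: u64, lower: u64, upper: u64) -> u64 {
    let ratio = (used as u128 * PPM as u128 / max as u128) as u64;
    ratio.clamp(lower, upper)
}

/// One base-fee update step (hypothetical simplification of the pallet logic).
pub fn next_base_fee(base_fee: u128, usage: u64, target: u64, elasticity: u64) -> u128 {
    // Coefficient = 2 × |usage - target|, capped at 100% since a Permill
    // cannot represent more than that.
    let coef = (usage.abs_diff(target) * 2).min(PPM);
    // Scale by the elasticity factor (assumption), still in ppm.
    let scaled = coef as u128 * elasticity as u128 / PPM as u128;
    let delta = base_fee * scaled / PPM as u128;
    if usage > target {
        base_fee.saturating_add(delta) // above target: raise the fee
    } else {
        base_fee.saturating_sub(delta) // at or below target: lower (or keep) it
    }
}

/// EVM fee: gas used × (base fee per gas + priority fee per gas).
pub fn evm_fee(gas_used: u128, base_fee_per_gas: u128, priority_fee_per_gas: u128) -> u128 {
    gas_used * (base_fee_per_gas + priority_fee_per_gas)
}
```

For example, take a target of 50% and a block that is 75% full. The coefficient is 2 × 25% = 50%. With a 12.5% elasticity factor, the base fee rises by 6.25% for the next block.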

For Users:

Predictable base fees

Optional priority fees for faster inclusion

Protection against fee spikes

For Validators:

Stable reward structure

Additional incentives during high demand

Protection against spam attacks

Smart contracts on UOMI are primarily written in Solidity, the most widely used programming language for EVM development. Solidity is:

Object-oriented and high-level

Specifically designed for smart contracts

Similar to JavaScript/C++ in syntax

Statically typed

💡 Note While Solidity is the most common choice, you can also use other EVM-compatible languages like Vyper.

To start developing smart contracts on UOMI, you'll need:

Browser extension for interacting with EVM chains

Manages your accounts and transactions

Connects dApps to the blockchain

Solidity Compiler: Converts Solidity code to EVM bytecode

Development Framework: Hardhat, Truffle, or Foundry

Code Editor: VS Code with Solidity extensions recommended

State Variables

Permanently stored in contract storage

Represent the contract's state

Functions

Execute contract logic

Can be public, private, internal, or external

Can modify state or be view/pure

Events

Log important changes

Can be monitored by applications

You can interact with smart contracts through:

MetaMask

Send transactions

Manage accounts

Connect to dApps

Web3 Libraries

ethers.js

web3.js

Block Explorers

View transactions

Verify contracts

Monitor events

Set Up MetaMask

Install the extension

Create or import an account

Add UOMI network

Get Test Tokens

Use the

Required for deployment and testing

Choose Development Tools

Install development framework

Set up your IDE

Configure network settings

Ready to start developing? Check out:

This guide will help you set up your environment for ink! and Wasm smart contract development in UOMI.

ℹ️ Note Before proceeding, make sure your system meets the requirements for Rust development.

ink! is a Rust-based eDSL (embedded Domain Specific Language) developed by Parity Technologies. It's specifically designed for creating smart contracts that work with Substrate's pallet-contracts.

Rather than creating a new programming language, ink! adapts Rust's capabilities for smart contract development.

💡 Tip Want to learn more about why ink! is a great choice for smart contract development? Check out the detailed benefits here.

Curious about the choice of WebAssembly for smart contracts? Find comprehensive

Rust and Cargo are essential prerequisites for Wasm smart contract development.

Linux and macOS

Windows

Visit the and follow the Windows installation instructions.

Set up your Rust environment with these commands:

⚠️ Warning Due to a bug in cargo-contract, building contracts with Rust nightly 1.70.0 or higher will fail. Use rustc v1.69.0 or older until the issue is resolved on the cargo-contract side. For a better development experience, create a toolchain file in the root of your project directory with the following values.

The primary tool you'll need is cargo-contract, a CLI tool for managing WebAssembly smart contracts.

Prerequisites

First, install binaryen for WebAssembly bytecode optimization:

Additional Dependencies

Install required linking tools:

Installing cargo-contract

💡 Tip Use --force to ensure you get the latest version. For a specific version, add --version X.X.X

Example for specific version:

Explore available commands with:

🔧 Alternative Setup Skip manual installation by using our pre-configured development container.

Find detailed instructions for using our dev container in the swanky-dev-container Github repository.

UOMI provides a set of high-level API functions that wrap the low-level WebAssembly calls, making it easy to develop agents without dealing with memory management and unsafe code. These functions are designed to be safe, efficient, and easy to use.

log(message: &str)

Logs a message to the console for debugging purposes.

Example:

read_input() -> Vec<u8>

Reads the input data provided to the agent.

Example:

save_output(data: &[u8])

Saves the agent's output data.

Example:

get_input_file_service() -> Vec<u8>

Retrieves the content of an input file.

Example:

get_cid_file_service(cid: Vec<u8>) -> Vec<u8>

Retrieves a file from IPFS using its CID.

Example:

prepare_request(body: &str) -> Vec<u8>

Prepares a request body for AI model interaction.

call_ai_service(model: i32, content: Vec<u8>) -> Vec<u8>

Calls an AI model with the prepared request. Model can be found on the

Example:

Minimize AI Calls

Use efficient prompts

Optimize Data Handling

Process data in appropriate chunks

Remember that these functions are part of the protected API and should not be modified. They provide a safe interface to interact with the UOMI blockchain and AI capabilities.
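The prompt examples for these functions build the request JSON by hand. That approach breaks as soon as the user input contains a quote or a newline. A minimal sketch of a safer builder follows. It assumes the {"messages":[{"role":"user","content":...}]} shape used in those examples. `json_escape` and `user_message_body` are hypothetical helpers that use only the standard library. You would then pass the resulting string to prepare_request.

```rust
/// Escape a string for use inside a JSON string literal.
pub fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len() + 2);
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Other control characters must use the \uXXXX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Build a single-turn chat body with the user content safely escaped.
pub fn user_message_body(content: &str) -> String {
    format!(r#"{{"messages":[{{"role":"user","content":"{}"}}]}}"#, json_escape(content))
}
```

If your agent already depends on a JSON crate such as serde_json, use it instead. The hand-written escaper only keeps this sketch dependency-free.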

XC20 assets, created by the team, maintain compatibility between the EVM and the Substrate framework that powers Polkadot via precompiles: a set of built-in smart contracts made to look like ERC20s. Calling functions on an XC20 invokes the underlying Substrate functionality, such as transferring tokens to another chain or sending them to another local address. This compatibility layer connects the world of EVM and smart contracts to advanced Substrate-based interoperability scenarios.

⚠️ Production Warning ask! eDSL currently has several limitations and issues being actively addressed. It is not recommended for production environments. Consider using ink! for production contracts.

ask! is a framework that enables AssemblyScript developers to write Wasm smart contracts for pallet-contracts. With TypeScript-like syntax, it makes smart contract development accessible to JavaScript/TypeScript developers.

Fee = Length Fee + Base Fee + (Weight Fee × Adjustment) + Tip

Fee = Gas Used × (Base Fee Per Gas + Priority Fee Per Gas)

let weight_used = Permill::from_rational(

weight.total().ref_time(),

max_weight.ref_time()

).clamp(lower, upper);

if usage > target {

// Increase base fee when above target utilization

let coef = Permill::from_parts(

(usage.deconstruct() - target.deconstruct()) * 2u32

);

} else if usage < target {

// Decrease base fee when below target utilization

let coef = Permill::from_parts(

(target.deconstruct() - usage.deconstruct()) * 2u32

);

}

Network Name: UOMI Finney Testnet

RPC URL: https://finney.uomi.ai

Chain ID: 4386

Currency Symbol: UOMI

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.19;

contract MyContract {

// State variables

uint256 public value;

// Events

event ValueChanged(uint256 newValue);

// Constructor

constructor() {

value = 0;

}

// Functions

function setValue(uint256 newValue) public {

value = newValue;

emit ValueChanged(newValue);

}

}

Standards

EIPs

PSPs

Avoid unnecessary copies

Use efficient data structures

Smart Logging

Remove logs for production agent

Avoid logging sensitive data

Basic understanding of TypeScript/JavaScript

Familiarity with package managers (yarn/npm)

1. Storage

2. Contract Methods

3. Events

This generates:

flipper.optimized.wasm: Compiled WebAssembly code

metadata.json: Contract metadata

flipper.wat: WebAssembly text format (human-readable)

Access polkadot.js

Select your target network

Upload contract files:

metadata.json for ABI

flipper.optimized.wasm for contract code

Follow the deployment wizard

Confirm deployment success

Need help? Join our Discord Community

Use TypeScript-aware IDEs for better development experience

Keep track of event IDs to avoid conflicts

Test thoroughly before deployment

Monitor gas usage and optimization

lib.rs: Contract implementation

💡 Tip You can use Swanky Suite to quickly bootstrap a new project. Check out the Swanky CLI guide for details.

Your Cargo.toml should include the following sections:

Every ink! contract must include:

⚠️ Required Elements

no_std attribute, so the contract compiles without the Rust standard library

Contract module marked with #[ink::contract]

Storage struct with #[ink(storage)]

At least one constructor with #[ink(constructor)]

At least one message with #[ink(message)]

The flipper contract is the simplest example provided by the ink! team, perfect for understanding basic contract structure.

Project Organization

Keep one contract per crate

Use meaningful names for contract modules

Organize tests in a separate module

Code Structure

Group related functionality together

Document your code with comments

Follow Rust naming conventions

Testing

Include unit tests

Add integration tests where needed

Use ink!'s testing utilities

Latest stable version of Rust

Install from https://rustup.rs/

Node.js

Version 14 or higher

Download from https://nodejs.org/

WebAssembly Target

Required for compiling Rust to WebAssembly

Install using rustup:

You have two options for installing and setting up WASP:

This is the fastest way to get started with a new WASP project:

This command will:

Create a new project directory

Set up the required project structure

Install necessary dependencies

Configure the development environment

If you prefer more control over the setup process, you can manually clone and configure the project:

Clone the repository:

Navigate to the agent directory:

Install dependencies:

Make the build script executable:

Start the development environment:

To verify that WASP is installed correctly:

Start the development environment:

You should see the UOMI Development Environment interface:

Available AI models are listed on the Models page. Using any other model ID in a call_ai_service call will cause the agent to crash.

After installation, you should:

Configure your development environment in uomi.config.json

Set up your AI model preferences (local node-ai or third-party services)

Familiarize yourself with the project structure

Try running the example agent

You have two options for AI service integration:

Follow the node-ai repository setup to run the production version locally. With this option, you don't need to specify URL or API keys in your configuration.

If you prefer to use external services such as OpenAI, configure your uomi.config.json. Note that this method does not guarantee determinism: model results in tests may differ from those in production.

If you encounter issues during installation:

Rust Build Failures

Verify your Rust installation: rustc --version

Ensure the WebAssembly target is installed: rustup target list --installed

Node.js Issues

Check Node.js version: node --version

Verify npm installation: npm --version

Permission Issues

Ensure build script is executable

Check filesystem permissions

If you need assistance:

Check the GitHub repository

Submit issues for bugs or questions

Contribute via pull requests

WASP is an open-source project maintained by the UOMI team. For additional support or information, refer to the project documentation or reach out to the community.

# Download and install

curl https://sh.rustup.rs -sSf | sh

# Configure environment

source ~/.cargo/env

rustup default stable

rustup update

rustup update nightly

rustup component add rust-src

rustup component add rust-src --toolchain nightly

rustup target add wasm32-unknown-unknown --toolchain nightly

[toolchain]

channel = "1.69.0"

components = [ "rustfmt", "rust-src" ]

targets = [ "wasm32-unknown-unknown" ]

profile = "minimal"

apt-get update

apt-get -y install binaryen

pacman -S binaryen

brew install binaryen

Find binary releases at https://github.com/WebAssembly/binaryen/releases

cargo install cargo-dylint dylint-link

cargo install cargo-contract --force --locked

cargo install cargo-contract --force --version 1.5.1

cargo contract --help

pub fn log(message: &str)

fn process_data() {

log("Starting data processing...");

// Process data

log("Data processing completed");

}

pub fn read_input() -> Vec<u8>

fn handle_request() {

let input_data = read_input();

let request = String::from_utf8(input_data).unwrap();

log(&format!("Received request: {}", request));

}

pub fn save_output(data: &[u8])

fn process_and_save() {

let result = process_data();

save_output(result.as_bytes());

log("Result saved successfully");

}

pub fn get_input_file_service() -> Vec<u8>

fn analyze_file() {

let file_content = get_input_file_service();

log(&format!("File size: {} bytes", file_content.len()));

// Analyze file content

}

pub fn get_cid_file_service(cid: Vec<u8>) -> Vec<u8>

fn fetch_ipfs_content() {

let cid = "QmExample...".as_bytes().to_vec();

let content = get_cid_file_service(cid);

log(&format!("Retrieved IPFS content: {} bytes", content.len()));

}

pub fn prepare_request(body: &str) -> Vec<u8>

pub fn call_ai_service(model: i32, content: Vec<u8>) -> Vec<u8>

fn process_with_ai() {

// Prepare AI request

let prompt = r#"{"messages": [{"role":"user","content":"hey"}]}"#;

let request = prepare_request(prompt);

// Call AI model

let response = call_ai_service(1, request);

// Process response

let result = String::from_utf8(response).unwrap();

log(&format!("AI response: {}", result));

}mod utils;

    use utils::*;

    #[no_mangle]
    pub extern "C" fn process() {
        // Log start of processing
        log("Starting agent execution");

        // Read input
        let input = read_input();
        let input_str = String::from_utf8(input).unwrap();
        log(&format!("Received input: {}", input_str));

        // Prepare AI request; escape the input minimally so the JSON stays valid
        // (a JSON library such as serde_json would handle every edge case)
        let escaped = input_str.replace('\\', "\\\\").replace('"', "\\\"").replace('\n', "\\n");
        let prompt = format!("{{\"messages\": [{{\"role\":\"user\",\"content\":\"{}\"}}]}}", escaped);
        let request = prepare_request(&prompt);

        // Call AI model
        let ai_response = call_ai_service(1, request);
        let result = String::from_utf8(ai_response).unwrap();

        // Save output
        save_output(result.as_bytes());
        log("Agent execution completed");
    }

    mod utils;
    use utils::*;

    fn process_file_content() {
        // Get file content
        let content = get_input_file_service();
        let text = String::from_utf8(content).unwrap();

        // Process with AI; escape the text minimally so the JSON stays valid
        let escaped = text.replace('\\', "\\\\").replace('"', "\\\"").replace('\n', "\\n");
        let prompt = format!("{{\"messages\": [{{\"role\":\"user\",\"content\":\"{}\"}}]}}", escaped);
        let request = prepare_request(&prompt);
        let summary = call_ai_service(1, request);

        // Save results
        save_output(&summary);
    }

    npm install --global yarn
    git clone https://github.com/ask-lang/ask-template.git

    cd ask-template

    ask-template/
    ├── asconfig.json     # AssemblyScript config
    ├── askconfig.json    # ask-lang config
    ├── build/
    │   └── metadata.json # Contract metadata
    ├── flipper.ts        # Contract code
    ├── index.d.ts        # TypeScript definitions
    ├── package.json      # Dependencies
    └── tsconfig.json     # TypeScript config

    // Event Definition
    @event({ id: 1 })
    export class FlipEvent {
        flag: bool;

        constructor(flag: bool) {
            this.flag = flag;
        }
    }

    // Storage Layout
    @spreadLayout
    @packedLayout
    export class Flipper {
        flag: bool;

        constructor(flag: bool = false) {
            this.flag = flag;
        }
    }

    // Contract Logic
    @contract
    export class Contract {
        // Contract implementation
    }

    @spreadLayout
    @packedLayout
    export class Flipper {
        flag: bool;

        constructor(flag: bool = false) {
            this.flag = flag;
        }
    }

    @contract
    export class Contract {
        // Constructor
        @constructor()
        default(flag: bool): void {
            this.data.flag = flag;
        }

        // Mutable Method
        @message({ mutates: true })
        flip(): void {
            this.data.flag = !this.data.flag;
            let event = new FlipEvent(this.data.flag);
            env().emitEvent(event);
        }

        // Read-only Method
        @message()
        get(): bool {
            return this.data.flag;
        }
    }

    @event({ id: 1 })
    export class FlipEvent {
        flag: bool;

        constructor(flag: bool) {
            this.flag = flag;
        }
    }

    // Emitting events
    env().emitEvent(new FlipEvent(true));

    # Install dependencies and build

    yarn && yarn build flipper.ts

    [package]
    name = "my_contract"
    version = "0.1.0"
    authors = ["Your Name <you@example.com>"]
    edition = "2021"

    [dependencies]
    ink = { version = "4.3", default-features = false }
    ink_metadata = { version = "4.3", features = ["derive"], optional = true }
    scale = { package = "parity-scale-codec", version = "3", default-features = false, features = ["derive"] }
    scale-info = { version = "2.5", default-features = false, features = ["derive"], optional = true }

    [dev-dependencies]
    ink_e2e = { version = "4.3" }

    [lib]
    path = "lib.rs"

    [features]
    default = ["std"]
    std = [
        "ink/std",
        "scale/std",
        "scale-info/std"
    ]
    ink-as-dependency = []
    e2e-tests = []

    #![cfg_attr(not(feature = "std"), no_std)]

    #[ink::contract]
    mod my_contract {
        /// Contract storage
        #[ink(storage)]
        pub struct MyContract {}

        impl MyContract {
            /// Contract constructor
            #[ink(constructor)]
            pub fn new() -> Self {
                Self {}
            }

            /// Contract message
            #[ink(message)]
            pub fn do_something(&self) {
                ()
            }
        }
    }

    rustup target add wasm32-unknown-unknown

    # Create a new UOMI agent project
    npx wasp create

    git clone https://github.com/Uomi-network/uomi-chat-agent-template.git
    cd uomi-chat-agent-template/agent
    npm install
    chmod +x ./bin/build_and_run_host.sh
    npm start

    UOMI Development Environment

    Type your messages. Use these commands:
    /clear - Clear conversation history
    /history - Show conversation history
    /exit - Exit the program

    {
        "models": {
            "1": {
                "name": "gpt-3.5-turbo",
                "url": "https://api.openai.com/v1/chat/completions",
                "api_key": "your-api-key-here"
            }
        }
    }

An XC20 asset is the interface used to wrap an asset from the Assets pallet (pallet-assets). We therefore first need to create an asset in the Assets pallet, mint it, and set its metadata. After that, we can access it from a smart contract through the XC20 precompile interface.

This section of the guide will demonstrate how to register an asset using Polkadot.js Apps.

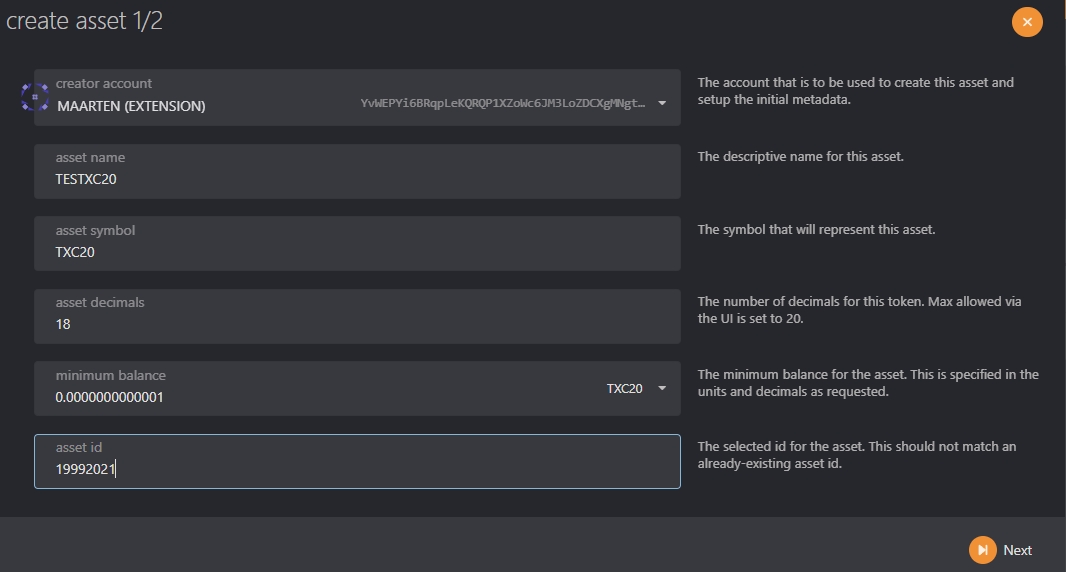

Before the asset's metadata can be set, we need to create the asset on the network using the following steps:

Navigate within Polkadot.js Apps to Network and click on Assets.

Click on + Create on the right to open the create asset pop-up.

Enter the asset name, the asset symbol, and the number of decimals for the asset. This does not have to be 18 decimals like the network's native asset; it is fully configurable.

The minimum balance is the Existential Deposit (ED) of your asset. The ED exists so that accounts with very small or zero balances do not bloat the blockchain's state and degrade its performance. NOTE: if you select pico units and enter a minimum balance of 1, the ED will be only 0.000000000001 units. We suggest a minimum balance of 1.

The asset ID is generated automatically. For permissionless creation, the valid range goes up to 2^32 - 1 (4,294,967,295).

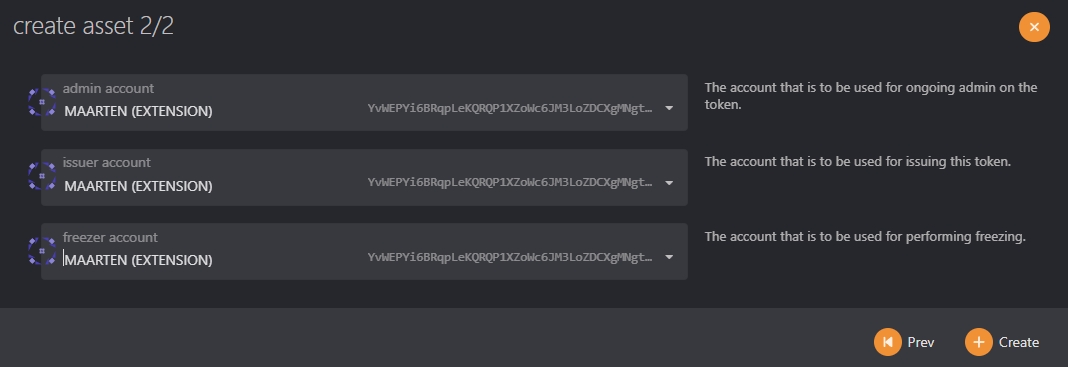

When everything is filled in, click Next.

On the next screen, set your roles and create the asset by signing with the creator account.
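pallet-assets stores every balance on chain, including the minimum balance, as an integer count of the asset's smallest unit. The decimals setting only changes how amounts are displayed.

The sketch below converts between raw values and display amounts. The helper names `format_balance` and `to_raw_units` are hypothetical. It shows that, with 12 decimals (pico units), a raw value of 1 is 0.000000000001 tokens, matching the NOTE above. It assumes at most 38 decimals, so that 10^decimals fits in a `u128`.

```rust
/// Formats a raw on-chain balance (an integer count of the asset's smallest
/// unit) as a decimal string, given the asset's configured decimals.
/// Assumes `decimals <= 38` so that 10^decimals fits in a u128.
fn format_balance(raw: u128, decimals: u32) -> String {
    if decimals == 0 {
        return raw.to_string();
    }
    let base = 10u128.pow(decimals);
    let whole = raw / base;
    let frac = raw % base;
    // Zero-pad the fractional part to exactly `decimals` digits.
    format!("{}.{:0width$}", whole, frac, width = decimals as usize)
}

/// Converts a whole-token amount into raw units, returning None on overflow.
fn to_raw_units(tokens: u128, decimals: u32) -> Option<u128> {
    10u128.checked_pow(decimals)?.checked_mul(tokens)
}

fn main() {
    // A raw minimum balance of 1 on a 12-decimal asset.
    println!("{}", format_balance(1, 12)); // prints 0.000000000001
    // One whole token of an 18-decimal asset, expressed in raw units.
    println!("{:?}", to_raw_units(1, 18)); // prints Some(1000000000000000000)
}
```

The same arithmetic explains why the asset's decimals matter when you later mint tokens: minting "1 token" of a 12-decimal asset means 10^12 raw units.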

Several roles matter when registering and managing assets. Except for the creator, the owner can assign each of these roles to other accounts via the assets.setTeam extrinsic. The roles are as follows:

Creator - the account responsible for creating the asset.

Issuer - the designated account capable of issuing or minting tokens. Defaults to the owner.

Admin - the designated account capable of burning tokens and unfreezing accounts and assets. Defaults to the owner.

Freezer - the designated account capable of freezing accounts and assets. Defaults to the owner.
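The following is a simplified, hypothetical Rust model of the roles listed above. It is meant only to make the permission rules concrete and is not the pallet's actual code.

As described above:

- The issuer mints.
- The admin burns and unfreezes (thaws).
- The freezer freezes.
- Every role defaults to the owner, and only the owner can reassign roles via setTeam.

The creator is not modelled separately, and accounts are plain strings for illustration.

```rust
/// Actions gated by the asset roles (simplified, illustrative only).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Action {
    Mint,
    Burn,
    Freeze,
    Thaw,
    SetTeam,
}

/// Hypothetical role assignments for one asset.
#[derive(Debug, Clone, PartialEq, Eq)]
struct AssetTeam {
    owner: String,
    issuer: String,
    admin: String,
    freezer: String,
}

impl AssetTeam {
    /// Issuer, admin and freezer all default to the owner.
    fn new(owner: &str) -> Self {
        AssetTeam {
            owner: owner.to_string(),
            issuer: owner.to_string(),
            admin: owner.to_string(),
            freezer: owner.to_string(),
        }
    }

    /// Reassigns issuer, admin and freezer; only the owner may do this.
    fn set_team(&mut self, caller: &str, issuer: &str, admin: &str, freezer: &str) -> Result<(), String> {
        if !self.can(caller, Action::SetTeam) {
            return Err(format!("{caller} is not the owner"));
        }
        self.issuer = issuer.to_string();
        self.admin = admin.to_string();
        self.freezer = freezer.to_string();
        Ok(())
    }

    /// Returns true if `who` holds the role required for `action`.
    fn can(&self, who: &str, action: Action) -> bool {
        let required = match action {
            Action::Mint => &self.issuer,
            Action::Burn | Action::Thaw => &self.admin,
            Action::Freeze => &self.freezer,
            Action::SetTeam => &self.owner,
        };
        required.as_str() == who
    }
}

fn main() {
    let mut team = AssetTeam::new("alice");
    // Initially the owner holds every role.
    println!("alice can mint: {}", team.can("alice", Action::Mint)); // true
    team.set_team("alice", "bob", "carol", "dave").unwrap();
    println!("bob can mint: {}", team.can("bob", Action::Mint)); // true
    println!("alice can mint: {}", team.can("alice", Action::Mint)); // false
}
```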

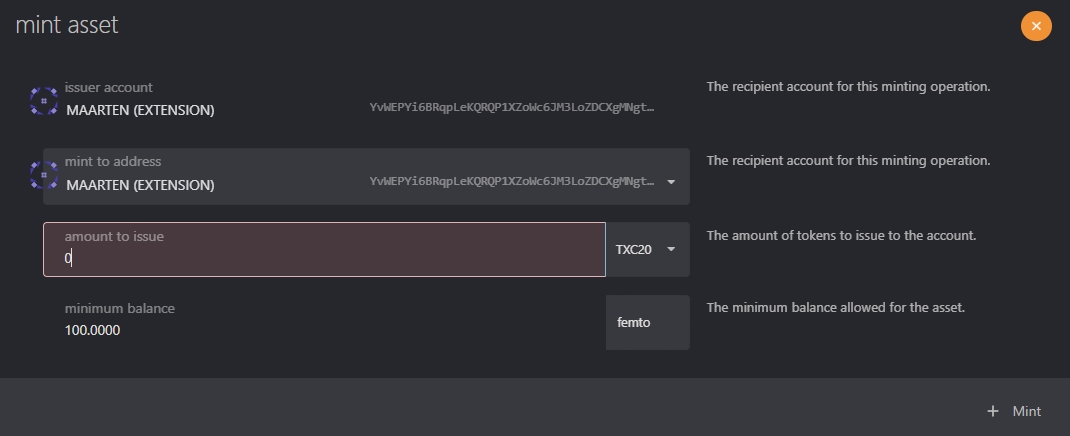

The asset is now created on our network, but has no supply. To mint the tokens, click on the +Mint button next to the asset to open the mint pop-up.

Only the issuer account has permission to mint the token.

Enter the address that will receive the minted tokens. We recommend using a multisig.

The metadata includes the asset name, symbol, and decimals.

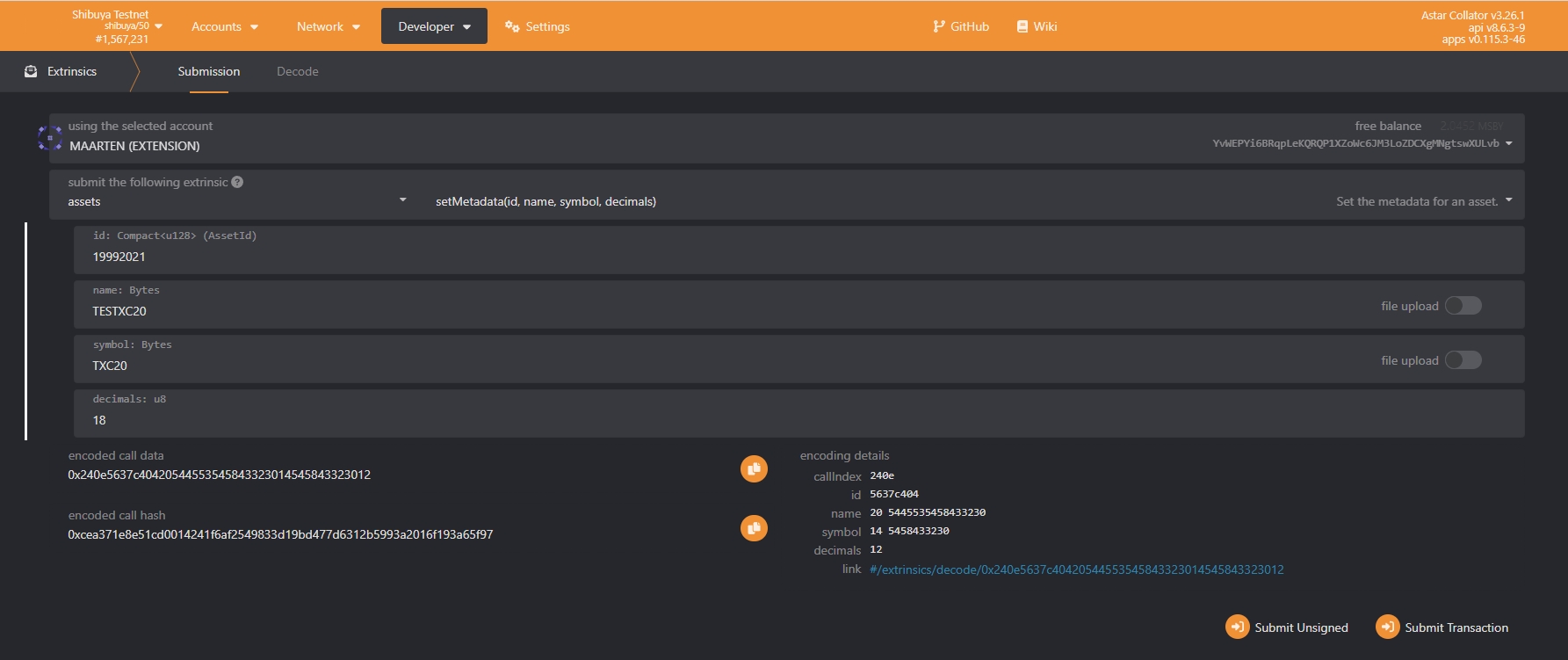

To set the asset metadata, click on Developer at the top of the page and then select Extrinsics from the dropdown. From there, take the following steps:

Select the owner's account

From the "submit the following extrinsic" dropdown, choose assets

Then select the setMetadata extrinsic

Enter the asset id from the asset you created before

Enter the name of the asset

Set the symbol for the asset

Set the decimals for the asset

Click on Submit Transaction

You can use the Extrinsics page to perform other functions, such as minting tokens, delegating a team, freezing and thawing assets or accounts, and more.

To access our asset as an XC20 in MetaMask or another EVM wallet, we need its precompile address, which is derived using the following rule:

address = "0xFFFFFFFF" + DecimalToHexWith32Digits(AssetId)

The first step is to take the asset Id and convert it to a hex value. You can use the search engine of your choice to look up a simple tool for converting decimals to hex values. In this tutorial, we will use this decimal to hexadecimal converter.

For asset ID 19992021, the hex value is 1310DD5.

XC20 precompiles can only fall between 0xFFFFFFFF00000000000000000000000000000000 and 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF. As such, the first 8 characters of the address will always be FFFFFFFF. Since Ethereum addresses are 40 characters long, you will need to prepend 0s to the hex value until the address has 40 characters.

The hex value generated in this example is 7 characters long. Prepending FFFFFFFF gives only 15 of the 40 characters needed to interact with the XC20 precompile. You therefore need to add 25 zeros (40 - 8 - 7) between FFFFFFFF and the generated hex value.

For this example, the full address is 0xFFFFFFFF00000000000000000000000001310dD5.
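The address rule above can be expressed as a small Rust function. This is a sketch, and `xc20_address` and `asset_id_from_xc20` are hypothetical helper names.

It assumes the asset ID fits in a `u128`, which is exactly 32 hex digits. That lets the `{:032X}` format specifier insert the zero padding between FFFFFFFF and the hex value. Hex digits are case-insensitive, so uppercase and lowercase forms denote the same address.

```rust
/// Builds the XC20 precompile address for an asset ID:
/// "0xFFFFFFFF" followed by the ID as 32 zero-padded hex digits.
fn xc20_address(asset_id: u128) -> String {
    format!("0xFFFFFFFF{:032X}", asset_id)
}

/// Recovers the asset ID from an XC20 precompile address.
/// Returns None unless the input is "0x" plus 40 hex digits starting with FFFFFFFF.
fn asset_id_from_xc20(address: &str) -> Option<u128> {
    let hex = address.strip_prefix("0x").or_else(|| address.strip_prefix("0X"))?;
    if hex.len() != 40 || !hex.chars().all(|c| c.is_ascii_hexdigit()) {
        return None;
    }
    // All characters are ASCII here, so splitting at byte 8 is safe.
    let (prefix, id_hex) = hex.split_at(8);
    if !prefix.eq_ignore_ascii_case("FFFFFFFF") {
        return None;
    }
    u128::from_str_radix(id_hex, 16).ok()
}

fn main() {
    let address = xc20_address(19992021);
    println!("{address}");
    println!("{:?}", asset_id_from_xc20(&address.to_lowercase())); // Some(19992021)
}
```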

Now that you've generated the XC20 precompile address, you can use the address to interact with the XC20 as you would with any other ERC20 in Remix.

In this guide, we will use the binary provided in the Uomi release.

If you have experience with Rust compilation, you can also build the binary from the repo.

⚠️ Minimum Hardware Requirements

RAM: 16GB

Storage: 500GB

CPU: 8 cores

Good network connectivity
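Before installing, you may want to check a machine against these minimums. The sketch below is a hypothetical preflight check and is not part of the Uomi tooling. It makes these assumptions:

- The thresholds come from the list above.
- RAM is read from Linux's /proc/meminfo, which reports kB, and is rounded to the nearest GiB.
- The core count comes from `std::thread::available_parallelism`, which reports logical CPUs rather than physical cores.
- Rust's standard library has no portable free-disk-space query, so the caller supplies the storage value (for example, from `df`).

```rust
use std::fs;
use std::thread;

// Minimums from the hardware requirements above.
const MIN_RAM_GB: u64 = 16;
const MIN_STORAGE_GB: u64 = 500;
const MIN_CPU_CORES: usize = 8;

struct MachineSpecs {
    ram_gb: u64,
    storage_gb: u64,
    cpu_cores: usize,
}

/// Parses the MemTotal line of /proc/meminfo (e.g. "MemTotal:  16318480 kB")
/// and rounds to the nearest GiB, since usable RAM is reported slightly
/// below the installed amount.
fn mem_total_gb(meminfo: &str) -> Option<u64> {
    let line = meminfo.lines().find(|l| l.starts_with("MemTotal:"))?;
    let kb: u64 = line.split_whitespace().nth(1)?.parse().ok()?;
    Some((kb + 512 * 1024) / (1024 * 1024))
}

/// Returns the unmet requirements (empty if the machine qualifies).
fn unmet_requirements(specs: &MachineSpecs) -> Vec<String> {
    let mut unmet = Vec::new();
    if specs.ram_gb < MIN_RAM_GB {
        unmet.push(format!("RAM: {} GB < {} GB", specs.ram_gb, MIN_RAM_GB));
    }
    if specs.storage_gb < MIN_STORAGE_GB {
        unmet.push(format!("Storage: {} GB < {} GB", specs.storage_gb, MIN_STORAGE_GB));
    }
    if specs.cpu_cores < MIN_CPU_CORES {
        unmet.push(format!("CPU: {} cores < {} cores", specs.cpu_cores, MIN_CPU_CORES));
    }
    unmet
}

fn main() {
    let ram_gb = fs::read_to_string("/proc/meminfo")
        .ok()
        .and_then(|s| mem_total_gb(&s))
        .unwrap_or(0);
    // Logical CPUs (hardware threads), which may exceed physical cores.
    let cpu_cores = thread::available_parallelism().map(|n| n.get()).unwrap_or(1);
    // Hypothetical value: supply the free space of the node's data disk yourself.
    let storage_gb = 500;

    let specs = MachineSpecs { ram_gb, storage_gb, cpu_cores };
    let unmet = unmet_requirements(&specs);
    if unmet.is_empty() {
        println!("Machine meets the minimum hardware requirements.");
    } else {
        for problem in &unmet {
            println!("Unmet requirement: {problem}");
        }
    }
}
```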

Before starting the installation, ensure you have:

Ubuntu 20.04 LTS or higher

Downloaded the uomi binary and genesis file from:

Get available peers at

Root or sudo privileges

Create necessary directories and user:

Add the copied peers to the genesis.json file:

Then install binary and genesis files:

Create a systemd service file at /etc/systemd/system/uomi.service:

Check node status:

View logs:

You can verify your node is running correctly by: